Strategies for Addressing Batch Variation in Autologous Cell Therapy Manufacturing

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on managing batch variation in autologous cell products. It explores the foundational causes and impacts of batch effects, evaluates current methodological and computational correction approaches, offers troubleshooting and optimization strategies for manufacturing, and discusses validation frameworks and comparative analyses for ensuring product quality and regulatory compliance. The content synthesizes the latest research and technological advancements to address a critical challenge in scaling personalized cell therapies.

Understanding Batch Effects: Sources and Impact on Autologous Product Quality

Defining Batch Variation in the Context of Autologous Therapies

Frequently Asked Questions

What is batch variation in autologous cell therapies? Batch variation refers to the inherent differences that occur between individual production runs (batches) of autologous cell therapies. Since each batch starts with cells from a different patient, variability arises from patient-specific biological factors combined with technical manufacturing differences. This contrasts with allogeneic therapies where a single donor source is used for multiple patients [1] [2].

Why is batch variation particularly challenging for autologous therapies? Batch variation is especially problematic because each patient's cells behave differently during manufacturing. A process that works with high yield for one patient's cells may fail completely for another. This variability directly impacts patient access to treatment, as failure to manufacture a viable product can be life-threatening for patients with no alternative options [2].

What are the primary sources of batch variation?

- Patient Biological Variability: Disease severity, prior treatments (chemotherapy, radiation), age, genetic factors, and overall health status [2]

- Starting Material Collection: Differences in apheresis protocols, collection devices, operator training, and anticoagulants used [1] [2]

- Raw Materials: Variability in reagents, media, plasmids, viral vectors, and other process components [1]

- Manufacturing Process: Differences in cell growth kinetics, transduction efficiency, and handling conditions [1]

How can I detect and measure batch effects in my dataset? Several computational methods can identify batch effects in omics data:

- Visualization: PCA, t-SNE, or UMAP plots showing samples clustering by batch rather than biological source [3] [4]

- Quantitative Metrics: Normalized mutual information (NMI), adjusted rand index (ARI), kBET, or PCR_batch [3]

- Statistical Analysis: Differential expression analysis comparing batches to identify batch-correlated features [5]
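To make the visualization and PCR_batch ideas concrete, the sketch below runs PCA on a samples × features matrix and reports how much of the variation on the leading principal components is explained by batch labels. The score is a variance-weighted R², in the spirit of PCR_batch. This is a simplified illustration that assumes NumPy and synthetic data. `pc_batch_r2` is a hypothetical helper, not the published metric's reference implementation.

```python
import numpy as np

def pc_batch_r2(X, batches, n_pcs=10):
    """Variance-weighted share of top-PC variation explained by batch labels.

    X: (n_samples, n_features) array, e.g. log-normalized expression.
    batches: one batch label per sample.
    Returns a value in [0, 1]; values near 1 mean the leading PCs mostly
    separate batches rather than biology.
    """
    X = np.asarray(X, dtype=float)
    labels = np.asarray(batches)
    Xc = X - X.mean(axis=0)                      # center each feature
    U, S, _ = np.linalg.svd(Xc, full_matrices=False)
    k = min(n_pcs, len(S))
    scores = U[:, :k] * S[:k]                    # sample coordinates on each PC
    weights = S[:k] ** 2                         # proportional to variance per PC
    r2 = np.zeros(k)
    for j in range(k):
        pc = scores[:, j]
        ss_total = np.sum((pc - pc.mean()) ** 2)
        # Between-batch sum of squares (one-way ANOVA decomposition)
        ss_between = sum(
            np.sum(labels == b) * (pc[labels == b].mean() - pc.mean()) ** 2
            for b in np.unique(labels)
        )
        r2[j] = ss_between / ss_total if ss_total > 0 else 0.0
    return float(np.sum(weights * r2) / np.sum(weights))

# Synthetic demo: 3 batches of 20 samples, 200 features; batch "B" gets an offset
rng = np.random.default_rng(0)
X = rng.normal(size=(60, 200))
batch = np.repeat(["A", "B", "C"], 20)
X_shifted = X + (batch == "B")[:, None] * 2.0
print(pc_batch_r2(X, batch), pc_batch_r2(X_shifted, batch))
```

On data without a batch offset the score stays low. After the simulated offset, the first PC is dominated by batch and the score rises sharply.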

Troubleshooting Guides

Problem: High Failure Rates in CAR-T Manufacturing

Symptoms: Inconsistent transduction efficiency, variable cell expansion rates, failure to meet release specifications for some patient batches.

Possible Causes and Solutions:

| Cause | Solution | Reference |
|---|---|---|
| Patient prior treatments affecting cell health | Implement stricter patient eligibility criteria or adapt process parameters | [2] |
| Variable apheresis material quality | Standardize collection protocols and operator training across sites | [2] |
| Inconsistent raw material quality | Use GMP-grade, compendial materials backed by vendor quality agreements | [1] |
| Uncontrolled process parameters | Implement process analytical technologies for real-time monitoring | [2] |

Problem: Batch Effects in Single-Cell RNA Sequencing Data

Symptoms: Cells clustering by batch rather than cell type or biological condition in dimensionality reduction plots.

Detection and Correction Workflow:

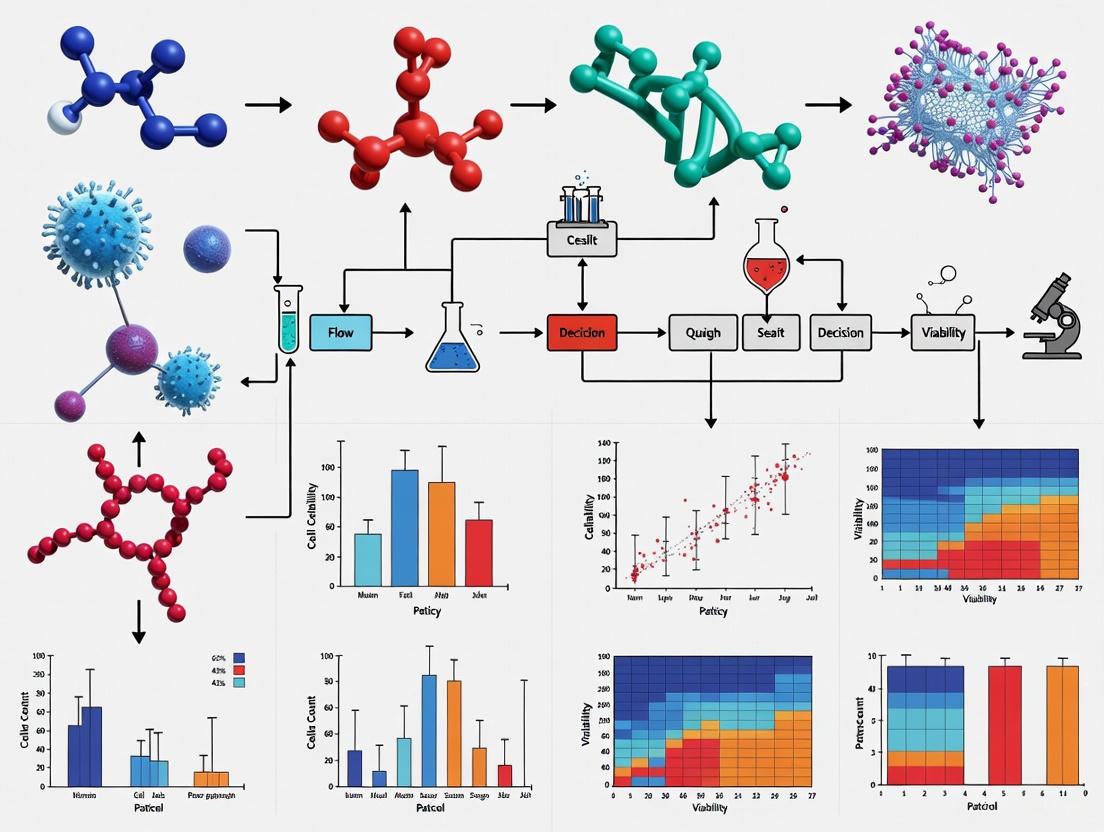

Batch Effect Analysis Workflow

Common Correction Algorithms:

| Method | Principle | Best For | Reference |
|---|---|---|---|
| Harmony | Iterative clustering with correction factors | Large datasets, fast runtime | [3] [4] |
| Seurat CCA | Canonical correlation analysis with MNN anchoring | Well-balanced sample types | [3] [4] |
| scANVI | Variational autoencoder with Bayesian modeling | Complex batch structures | [4] |
| MNN Correct | Mutual nearest neighbors in gene expression space | Similar cell type compositions | [3] |

Avoiding Overcorrection: Monitor for these signs of overcorrection: distinct cell types clustering together, complete overlap of samples from very different conditions, and cluster-specific markers comprising ubiquitous genes like ribosomal proteins [3] [4].
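The methods in the table should be used through their own published packages. To show what a correction does at its simplest, the sketch below applies a basic location/scale adjustment. Each feature is standardized within each batch, then mapped back to the pooled within-batch scale and the grand mean. This is roughly the model that ComBat refines with empirical Bayes shrinkage. `center_scale_by_batch` is a hypothetical name. The baseline assumes every batch has a similar cell-type composition, so it is exactly the kind of method that can overcorrect when that assumption fails.

```python
import numpy as np

def center_scale_by_batch(X, batches, eps=1e-8):
    """Naive per-batch location/scale adjustment of each feature.

    Each batch is standardized feature-by-feature, then rescaled to the pooled
    within-batch SD and shifted to the grand mean. No empirical Bayes
    shrinkage and no protection of biological covariates.
    """
    X = np.asarray(X, dtype=float)
    labels = np.asarray(batches)
    grand_mean = X.mean(axis=0)
    standardized = np.empty_like(X)
    within_ss = np.zeros(X.shape[1])
    for b in np.unique(labels):
        idx = labels == b
        mu = X[idx].mean(axis=0)
        sd = X[idx].std(axis=0)
        within_ss += idx.sum() * sd ** 2          # accumulate within-batch variance
        standardized[idx] = (X[idx] - mu) / (sd + eps)
    pooled_sd = np.sqrt(within_ss / len(labels))
    return standardized * pooled_sd + grand_mean

rng = np.random.default_rng(1)
X = rng.normal(size=(40, 5))
b = np.repeat([0, 1], 20)
X[b == 1] += 3.0                                   # simulated batch offset
Y = center_scale_by_batch(X, b)
print(np.round(Y[b == 0].mean(axis=0) - Y[b == 1].mean(axis=0), 6))
```

After adjustment the two batch means coincide for every feature. The method removes the offset whether or not it is technical, which is why the overcorrection checks above matter.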

Problem: Inconsistent Potency in Final Products

Symptoms: Variable functional performance in potency assays, inconsistent cytokine secretion profiles, differing target cell killing efficiency.

Quality Control Framework:

Quality Control Testing Framework

Experimental Protocols and Data Analysis

Protocol: Harmonized Quality Control Testing for Academic CAR-T Production

This protocol follows recommendations from the UNITC Consortium for standardizing QC testing [6]:

Mycoplasma Detection

- Use validated commercial nucleic acid amplification kits

- Validate a limit of detection of 10 CFU/mL or lower

- Test both cell suspensions and culture supernatants

- Confirm that results are available within the production timeline (the rapid alternative to the 28-day culture method)

Endotoxin Testing

- Employ Limulus Amebocyte Lysate (LAL) or Recombinant Factor C (rFC) assays

- Validate protocols to prevent matrix interference

- Follow European Pharmacopoeia guidelines

Vector Copy Number (VCN) Quantification

- Use validated qPCR or ddPCR techniques

- Establish an acceptable VCN range per cell, balancing efficacy against safety

- Monitor for risk of insertional mutagenesis
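For ddPCR or qPCR, VCN is commonly calculated by normalizing vector copies to a reference gene present at two copies per diploid cell. The sketch below shows that arithmetic. It also gives an approximate per-transduced-cell value when the vector-positive fraction is known, for example CAR+ cells measured by flow cytometry. Function names and example concentrations are hypothetical. The acceptable range itself has to come from your own risk assessment.

```python
def vcn_per_cell(vector_copies_per_ul, reference_copies_per_ul, ref_copies_per_cell=2):
    """Average vector copies per cell from ddPCR/qPCR copy concentrations.

    The vector target is normalized to a reference gene assumed present at
    ref_copies_per_cell copies per diploid cell (2 for a typical autosomal gene).
    """
    if reference_copies_per_ul <= 0:
        raise ValueError("reference concentration must be positive")
    return ref_copies_per_cell * vector_copies_per_ul / reference_copies_per_ul

def vcn_per_transduced_cell(bulk_vcn, transduced_fraction):
    """Approximate copies per vector-positive cell (e.g. CAR+ fraction by flow)."""
    if not 0 < transduced_fraction <= 1:
        raise ValueError("transduced_fraction must be in (0, 1]")
    return bulk_vcn / transduced_fraction

# Hypothetical ddPCR readout: 600 vector copies/uL, 800 reference copies/uL
bulk = vcn_per_cell(600.0, 800.0)
print(bulk)                                 # 1.5
print(vcn_per_transduced_cell(bulk, 0.5))   # 3.0
```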

Potency Assessment

- Implement IFN-γ ELISA following antigenic stimulation

- Include flow cytometry for immunophenotyping

- Perform functional assays (cytokine release, cytolytic capacity)
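Release assays, such as chromium-51 or LDH release, commonly summarize cytolytic capacity as percent specific lysis. The calculation uses spontaneous-release and maximum-release control wells. Below is a minimal sketch of that standard formula. The function name and the counts in the example are hypothetical.

```python
def percent_specific_lysis(experimental, spontaneous, maximum):
    """Standard release-assay formula:
    100 * (experimental - spontaneous) / (maximum - spontaneous)."""
    if maximum <= spontaneous:
        raise ValueError("maximum release must exceed spontaneous release")
    return 100.0 * (experimental - spontaneous) / (maximum - spontaneous)

# Hypothetical counts per minute (cpm) at one effector:target ratio
print(percent_specific_lysis(experimental=3000, spontaneous=1000, maximum=5000))  # 50.0
```

Reporting this value across several effector:target ratios, with the same controls on every plate, makes batch-to-batch potency comparisons less sensitive to plate-level differences.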

Quantitative Data on Batch Variation Effects

Table 1: Impact of Culture Conditions on Bone Marrow Stromal Cell (BMSC) Properties, Fetal Bovine Serum (FBS) vs. Human Platelet Lysate (hPL) Expansion [7]

| Parameter | FBS Expansion | hPL Expansion | Significance |
|---|---|---|---|
| Proliferation Rate | Baseline | Significantly Increased | p < 0.05 |
| Gene Expression Trajectories | Distinct patterns | Different distinct patterns | Significant |
| Phenotype Markers | Canonical fibroblastic | Different signature | Significant |
| Chondrogenic Function | Decreased over time | Maintained over culture | p < 0.05 |
| Clotting Risk | Increased over time | Lower risk | p < 0.05 |

Table 2: Batch Variability of iPSC-Derived MSCs (iMSCs) in an Osteoarthritis Model [8]

| Batch | Differentiation Capacity | Extracellular Vesicle (EV) Anti-inflammatory Effects | Senescence |
|---|---|---|---|
| SD1 | Variable between batches | Prolonged activity | Reduced vs. primary |
| SD2 | Variable between batches | Prolonged activity | Reduced vs. primary |
| SD3 | Variable between batches | Prolonged activity | Reduced vs. primary |
| Primary MSCs | Consistent but declines | Diminished by passage 5 | Increased over time |

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Managing Batch Variation

| Material | Function | Quality Considerations |
|---|---|---|
| GMP-grade Media & Reagents | Cell culture expansion | High purity, compendial grade, produced under GMP [1] |
| Human Platelet Lysate (hPL) | FBS substitute for expansion | Standardized pooling, ABO blood group matching [7] |
| Validated QC Kits | Mycoplasma, endotoxin testing | Pharmacopoeia compliance, validated detection limits [6] |
| Clinical-grade Viral Vectors | Genetic modification | Consistent titer, purity, minimal empty capsids [9] |
| Automated, Closed Systems | Standardized manufacturing | Reduced operator variability, controlled environment [6] |

Key Risk Mitigation Strategies

Implement Flexible Processing: Design manufacturing processes that can accommodate variable growth kinetics while maintaining GMP requirements [2].

Strategic Raw Material Sourcing: Establish quality agreements with vendors, conduct incoming testing, and avoid sole-source materials when possible [1].

Comprehensive Analytics: Develop multivariate testing strategies using process analytical technologies for real-time monitoring and control [2].

Standardization Where Possible: Harmonize protocols across sites for cell collection, isolation, and processing while maintaining flexibility for patient-specific variations [6] [2].

Early Donor Variability Assessment: Intentionally introduce donor variability during process development to understand critical quality attributes [2].

The sections below provide troubleshooting guides and FAQs to help researchers identify and manage key sources of variability in autologous cell product manufacturing.

FAQs: Understanding and Troubleshooting Variability

1. What are the primary donor-related factors that cause variability in autologous cell therapies? The patient is the primary driver of variability. The cell product will always reflect the donor's condition at the time of collection [10]. Key factors include:

- Clinical Indication and Disease Status: Patients with different cancers (e.g., CLL, lymphoma, ALL) present with vastly different baseline blood counts and lymphocyte levels, directly impacting the starting material's cellular composition [10].

- Treatment History: Prior treatments, especially years of cytotoxic chemotherapy, can affect the quality and fitness of a patient's T cells [10].

- Patient Demographics: Age and other fixed factors can influence cell quality [10].

2. How do raw materials contribute to batch-to-batch variation? Cell culture media and other raw materials are a significant source of variability that can detrimentally affect cell growth, viability, and product quality [11]. This is due to:

- Complex Composition: Chemically defined media have many components, and minor differences in their concentrations can alter cell metabolism and function [11].

- Supply Chain Inconsistency: Variations in the production and sourcing of raw materials, including media, reagents, and cytokines, can introduce variability [12] [13].

- Mitigation Strategy: Employ rigorous raw material testing and quality control protocols. Using standardized, GMP-grade reagents helps reduce this risk [13].

3. What analytical challenges make it difficult to characterize variability? A major challenge is the lack of universal standardized assays [12]. Variability in the quality control methods themselves—such as assessments of viability, potency, and purity—can lead to differences in how cell characteristics are measured and interpreted [14]. Implementing advanced analytical techniques and process monitoring can help identify and mitigate these sources of variability [14].

4. How can the cell collection process itself introduce variability? For autologous therapies, the apheresis collection process is a key source of variation [10] [15].

- Product Contamination: Apheresis instruments have limited ability to resolve cell types. If blood flow is interrupted, the product can be contaminated with platelets, granulocytes, or red blood cells, which can inhibit T cell proliferation later in manufacturing [10].

- Lack of Standardization: Differences in equipment, collection processes, and freezing techniques across apheresis facilities contribute to variability in the starting material [14] [15].

5. Why is demonstrating comparability so challenging after process changes? Cell and gene therapies are complex living products. Even minor alterations to a manufacturing protocol can have a large impact on the final product's critical quality attributes (CQAs) [10] [16]. Regulatory bodies emphasize that demonstrating comparability requires extensive analytical characterization, stability testing, and a risk-based approach to show that process changes do not impact safety or efficacy [16] [13].

Troubleshooting Guides

Guide 1: Managing Donor-Lot Variability in Apheresis Starting Material

Problem: Significant batch-to-batch variability in the yield, purity, and cellular composition of apheresis material collected from different patients.

Investigation and Resolution:

- Step 1: Correlate with Patient Clinical Data. Review the patient's most recent complete blood count (CBC) and treatment history. Patients with lymphoma often have lymphopenia, leading to low T cell collections, while those with CLL may have lymphocytosis [10].

- Step 2: Analyze the Mononuclear Cell Product. Perform a full immunophenotype analysis (e.g., flow cytometry) on the apheresis product. Determine not just the CD3+ T cell count, but also the levels of contaminants like monocytes, B cells, and granulocytes [10].

- Step 3: Adapt the Manufacturing Process. Based on the starting material's profile, consider process adaptations. For products with high monocyte contamination, implement additional T cell enrichment or purification steps. Understand that achieving higher purity often comes at the cost of a lower yield, so find the optimal balance [10].
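Steps 2 and 3 largely come down to converting flow cytometry percentages into absolute cell numbers and comparing the contaminant load with a limit. The sketch below does that bookkeeping. The 20% monocyte limit, population names, and counts are hypothetical placeholders, not values from [10]. A real decision would weigh several populations together.

```python
def apheresis_profile(total_nucleated_cells, percents):
    """Convert flow cytometry percentages into absolute cell numbers."""
    return {pop: total_nucleated_cells * pct / 100.0 for pop, pct in percents.items()}

def needs_t_cell_enrichment(percents, max_monocyte_pct):
    """Flag products whose monocyte share exceeds a site-defined limit."""
    return percents.get("monocytes", 0.0) > max_monocyte_pct

# Hypothetical apheresis product: 5e9 total nucleated cells
flow = {"CD3+": 40.0, "monocytes": 35.0, "B cells": 10.0, "granulocytes": 5.0}
counts = apheresis_profile(5e9, flow)
print(f"{counts['CD3+']:.2e} CD3+ T cells")                    # 2.00e+09 CD3+ T cells
print(needs_t_cell_enrichment(flow, max_monocyte_pct=20.0))    # True
```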

Guide 2: Controlling Variability from Cell Culture Media

Problem: Inconsistent cell growth, viability, or metabolic profiles between batches, suspected to be caused by media variability.

Investigation and Resolution:

- Step 1: Characterize the Media. Use advanced analytical techniques like in-depth liquid phase separations, mass spectrometry, and spectroscopic methods to characterize the media's composition and identify the root cause of variability [11].

- Step 2: Audit Your Supply Chain. Secure a reliable supply of GMP-grade raw materials and media. Implement strategic partnerships and supply chain management strategies to ensure consistency [13].

- Step 3: Implement Robust QC. Employ rigorous quality control for all incoming raw materials. This includes in-process testing and real-time release criteria to ensure the stability and reliability of the culture system [13] [11].

Table 1: Impact of Clinical Indication on Apheresis Product and Manufacturing

| Clinical Indication | Typical Peripheral Blood Profile | Impact on Apheresis Product | Downstream Manufacturing Effect |
|---|---|---|---|
| Chronic Lymphocytic Leukemia (CLL) | Lymphocytosis (increased lymphocytes) [10] | High total mononuclear cell count [10] | Requires careful purification; potential for high non-T cell contaminants [10] |
| Lymphoma | Lymphopenia (low lymphocytes) [10] | Low total mononuclear cell count; wide variation in CD3+ T cell percentage [10] | Lower manufacturing success rate; challenges in achieving target cell numbers [10] |
| Acute Lymphocytic Leukemia (ALL) | Varies by patient and disease stage | High total mononuclear cell count; wide variation in CD3+ T cell percentage [10] | Can be unpredictable; depends on specific contaminating populations [10] |

Table 2: Key Research Reagent Solutions for Variability Control

| Reagent / Material | Function | Considerations for Variability Reduction |
|---|---|---|
| Chemically-Defined Media | Provides nutrients for cell growth and expansion [11] | Use GMP-grade, high-quality lots; perform qualification assays; avoid serum to reduce unknown variables [13] [11] |
| Cell Isolation Kits (e.g., MACS, FACS) | Isolates desired cell population (e.g., T cells) from a heterogeneous mixture [15] | Standardize protocols across operators and sites; validate recovery and purity for each cell type [10] [15] |
| Recombinant Cytokines (e.g., IL-2, IL-7) | Promotes T cell expansion and can alter cell phenotype [15] | Use consistent, GMP-grade sources; carefully control concentrations and timing of supplementation [15] |
| Viral Vectors | Delivers genetic material for cell engineering (e.g., CARs) [16] [15] | Treated as a critical starting material by regulators; requires extensive testing for titer, potency, and absence of replication-competent virus [16] |
| Cryopreservation Solutions | Protects cells during freezing, transport, and storage [15] | Standardize freeze/thaw rates and cryoprotectant concentrations; monitor for transient warming events during storage [10] [15] |

Experimental Protocols and Workflows

Protocol: A Risk-Based Approach to Managing Process Changes

Purpose: To guide researchers in assessing the impact of a manufacturing process change (e.g., new media formulation, altered expansion time) on product CQAs and determining the necessary testing to demonstrate comparability [16] [13].

Workflow:

- Define the Change: Clearly document the specific change being made to the process.

- Risk Assessment: Conduct a risk assessment to identify which CQAs (e.g., potency, identity, viability) are most likely to be impacted by the change [16].

- Analytical Testing Plan: Develop a tiered testing strategy. For high-risk CQAs, employ extended characterization methods. For lower-risk attributes, release assays may suffice [16].

- Execute Study: Manufacture batches using the old and new processes.

- Compare Data: Perform a side-by-side comparison of the CQAs from both batches. Use historical data as a reference, but prioritize direct comparison data [16].

- Conclusion: Determine if the products are comparable. If significant differences are found in a critical attribute, the change may not be acceptable.

Process Change Comparability Workflow
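The comparison step can be illustrated with one simple statistical approach, a quality range. The range is mean ± k standard deviations of pre-change batches, and each post-change result is checked against it. This approach is an assumption for illustration, not the method prescribed by [16] or [13]. Equivalence testing is a common alternative, and with few autologous batches the estimated range is itself highly uncertain. All values are hypothetical.

```python
import statistics

def quality_range(pre_change_values, k=3.0):
    """(low, high) = mean -/+ k * sample SD of pre-change batch results."""
    mean = statistics.mean(pre_change_values)
    sd = statistics.stdev(pre_change_values)
    return mean - k * sd, mean + k * sd

def within_range(post_change_values, bounds):
    """True for each post-change result that falls inside the range."""
    low, high = bounds
    return [low <= v <= high for v in post_change_values]

# Hypothetical final-product viability (%) before and after a process change
pre = [92.0, 94.0, 93.0, 95.0, 91.0]
post = [93.5, 90.0, 80.0]
bounds = quality_range(pre)
print([round(b, 1) for b in bounds])    # [88.3, 97.7]
print(within_range(post, bounds))       # [True, True, False]
```

A result outside the range does not by itself prove non-comparability. It identifies which CQA needs closer investigation under the risk assessment.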

Protocol: Characterizing Cell Culture Media Variability

Purpose: To identify and quantify the root causes of variability in cell culture media using orthogonal analytical methods [11].

Methodology:

- Sample Preparation: Acquire multiple lots of the same media formulation (both liquid and powder, if possible).

- Orthogonal Analysis: Subject the samples to a suite of analytical techniques.

- Liquid Chromatography-Mass Spectrometry (LC-MS): For targeted and untargeted metabolomics to identify differences in nutrient and metabolite concentrations [11].

- Inductively Coupled Plasma Mass Spectrometry (ICP-MS): To measure trace element and heavy metal contamination [11].

- Spectroscopic Methods (e.g., NMR, NIR): To provide a fingerprint of the overall media composition [11].

- Data Correlation: Correlate the analytical data from the different media lots with functional performance data from cell culture experiments (e.g., growth rate, viability, specific productivity).

Media Variability Characterization Workflow
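The data-correlation step can begin with a simple screen. Each analyte measured across lots is correlated with a functional readout, and analytes are ranked by the strength of association. The sketch below uses Pearson correlation via NumPy. Analyte names and values are hypothetical. With only a handful of lots, a strong correlation is a lead for follow-up experiments, not proof of a root cause.

```python
import numpy as np

def rank_analytes(analyte_matrix, analyte_names, performance):
    """Rank analytes by |Pearson r| with a per-lot performance readout.

    analyte_matrix: (n_lots, n_analytes) measured values, one row per media lot.
    performance: one functional value per lot (e.g. specific growth rate).
    A constant analyte column yields nan (NumPy also emits a warning).
    """
    A = np.asarray(analyte_matrix, dtype=float)
    y = np.asarray(performance, dtype=float)
    ranked = [(name, float(np.corrcoef(A[:, j], y)[0, 1]))
              for j, name in enumerate(analyte_names)]
    return sorted(ranked, key=lambda item: abs(item[1]), reverse=True)

# Hypothetical data: four media lots, three analytes
lots = [[1.0, 5.0, 0.20],
        [1.2, 5.1, 0.35],
        [0.8, 4.9, 0.10],
        [1.1, 5.0, 0.30]]
growth = [0.22, 0.18, 0.25, 0.20]
for name, r in rank_analytes(lots, ["analyte_A", "analyte_B", "analyte_C"], growth):
    print(f"{name}: r = {r:+.2f}")
```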

The Profound Impact of Batch Effects on Data and Clinical Outcomes

Frequently Asked Questions

What are batch effects and why are they a critical concern in autologous cell therapy? Batch effects are technical variations in data that are unrelated to the biological questions being studied. They can be introduced at virtually any stage of research or manufacturing, from sample collection and shipping to instrument changes and reagent lots. In autologous cell therapy, where each patient's cells constitute a unique "batch," these effects are particularly concerning as they can mask true biological signals, lead to incorrect conclusions, and even compromise product quality and patient safety. One study analyzing 456 batches of autologous natural killer (NK) cells found that transit time from medical institutions to processing facilities significantly influenced the proliferative potential of primary cells in the raw material [17].

How can I identify if my data is affected by batch effects? Multiple approaches exist for detecting batch effects, ranging from simple visual checks to quantitative algorithms:

- Dimensionality Reduction: Plot samples from different batches using UMAP or t-SNE. If batches form separate "islands" or show consistent offsets, batch effects are likely present [18] [19].

- Histogram Overlays: Overlay histograms of constitutively expressed markers (e.g., CD45, CD3) across batches. Misalignment of peaks suggests technical variation [19].

- Bridge Samples: Include a consistent control sample in each batch and track its measurements over time using Levey-Jennings charts. Shifts in the control's values indicate batch effects [18].

- Variance Analysis: Calculate the variance in median marker expression or gated population percentages across files. Higher variance suggests stronger batch effects [19].
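The variance-analysis check can be scripted. Compute each file's median for a marker, then the variance of those medians across files. Comparing this number before and after normalization gives a rough measure of how much technical spread was removed. The sketch assumes intensities have already been read from the FCS files and transformed. The dictionary layout and the synthetic CD45 values are hypothetical.

```python
import numpy as np

def median_spread(files):
    """Per-file medians of one marker and the sample variance of those medians.

    files: dict mapping a file name to a 1-D array of transformed intensities.
    """
    medians = {name: float(np.median(values)) for name, values in files.items()}
    return medians, float(np.var(list(medians.values()), ddof=1))

# Synthetic CD45 intensities (arbitrary transformed units); batch 2 is shifted
rng = np.random.default_rng(2)
cd45 = {
    "batch1_s1": rng.normal(3.0, 0.3, 5000),
    "batch1_s2": rng.normal(3.0, 0.3, 5000),
    "batch2_s1": rng.normal(3.6, 0.3, 5000),
}
medians, spread = median_spread(cd45)
print({k: round(v, 2) for k, v in medians.items()}, round(spread, 3))
```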

What are the most effective methods for correcting batch effects in single-cell RNA sequencing data? A 2025 benchmark study compared eight widely used batch correction methods for scRNA-seq data and found that many of them introduce measurable artifacts during correction. Harmony was the only method that performed well consistently across all tests without substantially altering the data. MNN, scVI, and LIGER often altered the data considerably, while ComBat, ComBat-seq, BBKNN, and Seurat also introduced detectable artifacts [20].

How can I prevent batch effects when designing a longitudinal flow cytometry study? Prevention is the most effective strategy for managing batch effects:

- Standardize Procedures: Ensure all personnel follow detailed, written protocols for sample collection, processing, and staining [18].

- Reagent Titration: Titrate all antibodies correctly for the expected cell number and type [18].

- Instrument QC: Run standardized beads or controls before each acquisition to ensure consistent detector performance [18].

- Experimental Design: Randomize samples from different experimental groups across acquisition sessions instead of running all controls one day and all treatments the next [18].

- Fluorescent Cell Barcoding: Label samples with unique fluorescent tags, pool them, and stain them together in a single tube to eliminate staining and acquisition variability [18].
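The randomization recommended under Experimental Design can be generated reproducibly with the Python standard library. First shuffle samples within each group, then deal them round-robin across sessions so that every session gets a balanced mix. Finally, shuffle run order within each session. `allocate_to_sessions`, the sample IDs, and the session count are hypothetical. Bridge samples would be added to every session on top of this plan.

```python
import random
from collections import defaultdict

def allocate_to_sessions(samples, n_sessions, seed=0):
    """Balanced, randomized assignment of samples to acquisition sessions.

    samples: list of (sample_id, group) tuples.
    Returns a dict mapping session index -> list of sample_ids in run order.
    """
    rng = random.Random(seed)                 # fixed seed so the plan is reproducible
    by_group = defaultdict(list)
    for sample_id, group in samples:
        by_group[group].append(sample_id)
    sessions = defaultdict(list)
    offset = 0
    for group in sorted(by_group):
        ids = by_group[group]
        rng.shuffle(ids)
        # Deal this group round-robin, continuing where the previous group stopped
        for i, sample_id in enumerate(ids):
            sessions[(offset + i) % n_sessions].append(sample_id)
        offset += len(ids)
    for ids in sessions.values():
        rng.shuffle(ids)                      # randomize run order within a session
    return dict(sessions)

samples = [(f"ctrl{i}", "control") for i in range(6)] + \
          [(f"trt{i}", "treated") for i in range(6)]
plan = allocate_to_sessions(samples, n_sessions=3)
for session, ids in sorted(plan.items()):
    print(session, ids)
```

With six controls and six treated samples over three sessions, each session receives two of each, so no session is confounded with a single group.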

Troubleshooting Guides

Problem: Inconsistent Growth Rates in Autologous Cell Manufacturing

Symptoms: Variable expansion rates of patient cells, final cell products failing to meet target cell numbers.

Potential Causes and Solutions:

- Cause: Variability in raw input material due to patient health status, degree of pretreatment, or apheresis collection procedures [21] [22].

- Solution: Request complete blood count (CBC) data from apheresis centers to understand the hematological composition of incoming material [21].

- Cause: Shipping conditions and transit time affecting cell viability and potency [17].

- Solution: Standardize shipping protocols across collection sites. Monitor transit time and its impact on specific growth rates, particularly in the early culture phase [17].

- Cause: Inconsistent reagent quality, particularly between lots of critical materials like fetal bovine serum [5].

- Solution: Implement rigorous reagent qualification and establish contractual quality agreements with suppliers to address root causes of variability [21].

Table 1: Impact of Transit Time on Autologous NK Cell Manufacturing [17]

| Clinical Site | Distance to Cell Processing Facility (CPF) | Transit Time | Average Culture Period (days) | Average Specific Growth Rate (day⁻¹) | Batches with <1×10⁹ cells |
|---|---|---|---|---|---|
| Clinic A (Tokyo) | 4 km | Same day | 21 | 0.22 | 4.3% (7/164) |
| Clinic B (Fukushima) | 203 km | ~24 hours | 19 | 0.24 | 11.3% (33/292) |
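For exponential growth, specific growth rate is conventionally defined as μ = ln(N_t / N_0) / t. The table does not state how the study computed its averages, so treat that definition as an assumption here. The sketch below computes μ from hypothetical start and end counts. It also recomputes the low-yield percentages in the last column from the batch counts given in Table 1.

```python
import math

def specific_growth_rate(n_start, n_end, days):
    """mu in day^-1, assuming exponential growth: ln(N_end / N_start) / t."""
    if n_start <= 0 or n_end <= 0 or days <= 0:
        raise ValueError("counts and duration must be positive")
    return math.log(n_end / n_start) / days

def percent(part, whole):
    """Percentage rounded to one decimal place, as in the table."""
    return round(100.0 * part / whole, 1)

# Hypothetical counts: 5e7 cells expanded to 2e9 over 14 days
print(f"{specific_growth_rate(5e7, 2e9, 14):.3f} day^-1")
# Low-yield batch fractions from Table 1
print(percent(7, 164), percent(33, 292))   # 4.3 11.3
```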

Problem: Batch Effects in Multi-Omics Data Integration

Symptoms: Apparent biological differences that actually correlate with processing date, sequencing batch, or laboratory site.

Potential Causes and Solutions:

- Cause: Technical variations between library prep, sequencing runs, or analysis pipelines [23] [5].

- Solution: For large-scale studies with incomplete data profiles, consider Batch-Effect Reduction Trees (BERT), which retains significantly more numeric values compared to other methods and efficiently handles covariates [24].

- Cause: Integration of datasets from different platforms or technologies with different distributions and scales [5].

Table 2: Comparison of Batch Effect Correction Methods for Different Data Types

| Method | Best For | Key Advantages | Limitations |
|---|---|---|---|
| Harmony [20] | scRNA-seq | Consistently performs without creating artifacts; preserves biological variation | - |
| ComBat-met [25] | DNA methylation data | Beta regression framework captures unique characteristics of methylation data | May not be optimal for other data types |
| cytoNorm & cyCombine [19] | High-parameter cytometry | Reduces variance in marker expression and population percentages | Effectiveness varies across cell populations |
| BERT [24] | Incomplete multi-omics profiles | Retains up to 5 orders of magnitude more values; handles covariates | - |

Problem: High Batch-to-Batch Variability in Allogeneic Cell Products

Symptoms: Inconsistent product quality between donors, difficulty in establishing reproducible manufacturing processes.

Potential Causes and Solutions:

- Cause: Biological variability between healthy donors used as starting material [21].

- Solution: Implement rigorous donor screening and establish master cell banks from selected donors to ensure consistency [21].

- Cause: Limited ability to return to the same donor repeatedly due to health changes or availability [21].

- Solution: Maintain a pool of qualified donors with comprehensive attribute data to support sustainability [21].

Experimental Protocols

Protocol: Assessing Batch Effects Using Bridge Samples in Longitudinal Studies

Purpose: To identify and monitor technical variations across multiple batches in long-term studies.

Materials:

- Aliquots of a stable control sample (e.g., PBMCs from a large leukopak) preserved for the study duration

- Standardized staining panels and reagents

- Consistent instrument acquisition settings

Procedure:

- Preparation: In each batch of the study, thaw and prepare one aliquot of the bridge sample alongside experimental samples using identical protocols [18].

- Acquisition: Run the bridge sample each time experimental samples are acquired, maintaining consistent instrument settings and quality control procedures [18].

- Analysis: Track the expression of key markers in the bridge sample over time using Levey-Jennings charts or similar visualization tools [18].

- Monitoring: Look for sudden shifts or gradual drifts in the bridge sample measurements that may indicate batch effects requiring correction [18].
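The Levey-Jennings review can be partly automated. The sketch below sets limits from an initial set of bridge-sample runs and flags later results beyond ±2 SD (warning) and ±3 SD (action). It also flags a run of consecutive results on one side of the mean as possible drift. These rules are modeled loosely on common laboratory QC (Westgard-style) rules. The run length of 6, the function name, and the readings are assumptions to adapt to your own SOP.

```python
import statistics

def levey_jennings_flags(baseline, new_values, run_length=6):
    """Flag bridge-sample results against limits derived from baseline runs.

    Returns a list of (index, message) tuples for new_values.
    """
    mean = statistics.mean(baseline)
    sd = statistics.stdev(baseline)
    flags = []
    last_side, side_run = 0, 0
    for i, value in enumerate(new_values):
        z = (value - mean) / sd
        if abs(z) > 3:
            flags.append((i, "action: beyond 3 SD"))
        elif abs(z) > 2:
            flags.append((i, "warning: beyond 2 SD"))
        # Track consecutive points on the same side of the baseline mean (drift)
        side = (value > mean) - (value < mean)      # +1, -1, or 0
        if side != 0 and side == last_side:
            side_run += 1
        else:
            side_run = 1 if side != 0 else 0
        last_side = side
        if side_run == run_length:
            flags.append((i, f"drift: {run_length} consecutive points on one side"))
    return flags

# Hypothetical bridge-sample readings (e.g. % of a gated population)
baseline = [10.0, 10.2, 9.8, 10.1, 9.9]
new_runs = [10.1, 10.4, 10.6, 10.1, 10.1, 10.2]
for flag in levey_jennings_flags(baseline, new_runs):
    print(flag)
```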

Protocol: Automated Manufacturing to Reduce Human Intervention

Purpose: To minimize variability introduced by manual processing in cell therapy manufacturing.

Materials:

- Closed, automated cell processing systems (e.g., Miltenyi Biotec CliniMACS Prodigy, Lonza Cocoon)

- Single-use disposable sets

- Standardized reagent kits

Procedure:

- System Setup: Implement automated systems that integrate multiple unit operations (selection, activation, expansion) rather than modular equipment requiring manual transfers [22].

- Process Validation: Establish growth curves and metabolite profiles to create automated feeding schedules that adjust based on cell growth rates [21].

- In-process Monitoring: Incorporate analytical assays (cell counts, population doublings) to guide process adjustments without manual intervention [21].

- Closed Processing: Utilize closed systems to minimize contamination risk and reduce human error [22].
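A growth-based feeding rule can be as simple as adding enough medium after each in-process count to bring viable cell density back to a target, within the vessel's working volume. The sketch below is a hypothetical illustration of that logic, not how any specific automated platform schedules feeds. The target density, volumes, and counts are placeholders.

```python
def feed_volume_ml(viable_cells, current_volume_ml, target_density_per_ml, max_volume_ml):
    """Medium (mL) to add so cell density drops to the target, capped by vessel size."""
    required_volume = viable_cells / target_density_per_ml
    to_add = max(0.0, required_volume - current_volume_ml)
    headroom = max(0.0, max_volume_ml - current_volume_ml)
    return min(to_add, headroom)

# Hypothetical in-process count: 6e8 viable cells in 300 mL, target 1e6 cells/mL,
# 1000 mL working volume
print(feed_volume_ml(6e8, 300.0, 1e6, 1000.0))   # 300.0
```

When the cap binds, density remains above target after feeding. A rule like this would then need to trigger a split or harvest decision rather than another feed.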

Visual Guide to Batch Effect Identification and Correction

Batch Effect Identification and Correction Workflow

Table 3: Key Research Reagent Solutions for Batch Effect Management

| Resource | Function | Application Example |
|---|---|---|
| Bridge/Anchor Samples | Consistent control sample across batches | PBMCs from large leukopak aliquoted for longitudinal studies [18] |
| Fluorescent Cell Barcoding | Labels individual samples for pooled staining | Eliminates staining and acquisition variability by processing samples together [18] |
| Automated Cell Processing Systems | Integrated, closed manufacturing | Reduces human intervention and variability in cell therapy production [22] |
| Standardized Apheresis Protocols | Consistent collection of starting material | Harmonizes procedures across multiple collection sites [21] |
| Quality Control Beads | Instrument performance verification | Ensures consistent detector response across acquisitions [18] |
| Master Cell Banks | Consistent starting material for allogeneic products | Provides reproducible donor material for multiple batches [21] |
| Batch Effect Correction Algorithms | Computational removal of technical variation | Harmony for scRNA-seq, cytoNorm for cytometry, BERT for multi-omics [20] [19] [24] |

Economic and Regulatory Consequences of Irreproducibility

What are the primary economic consequences of irreproducible results in autologous cell therapy research? Irreproducible results in autologous cell therapy manufacturing lead to substantial economic burdens, including escalated production costs and clinical delays. The Cost of Goods (COGs) for autologous cell therapies like CAR-T cells typically ranges from $100,000 to $300,000 per dose [26]. Batch failures caused by irreproducibility directly contribute to these exorbitant costs. Furthermore, the financial impact extends to clinical trial delays and holds—Chemistry, Manufacturing, and Controls (CMC) issues represent a disproportionately high cause of clinical holds placed on cell therapy trials by regulatory agencies like the FDA [26]. These delays increase development costs, which average $1.94 billion to bring a cell or gene therapy to market [26], ultimately limiting patient access to these transformative treatments.
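Simple arithmetic makes the link between batch failures and COGS explicit. If every batch costs the same whether or not it is released, the expected cost per released dose is the batch cost divided by the success rate. The sketch below assumes one dose per successful batch and ignores re-collection, logistics, and delay costs. The $150,000 batch cost and the success rates are hypothetical inputs, not figures from [26].

```python
def cost_per_released_dose(cost_per_batch, success_rate):
    """Expected manufacturing cost per released dose (one dose per successful batch)."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_batch / success_rate

# Hypothetical: $150,000 per batch at different manufacturing success rates
for rate in (0.95, 0.90, 0.80):
    print(f"{rate:.0%}: ${cost_per_released_dose(150_000, rate):,.0f}")
```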

How does batch-to-batch variability affect regulatory compliance? Batch-to-batch variability poses significant regulatory challenges by compromising the consistent quality, safety, and efficacy required for approval. Regulatory bodies including the FDA and EMA mandate that manufacturing processes demonstrate robust control and reproducibility [13] [6]. Inconsistent batches fail to meet the standards for Critical Quality Attributes (CQAs), which are essential for product release [13] [26]. This variability creates substantial hurdles in compiling the consistent data packages needed for regulatory submissions. The hospital exemption pathway for Advanced Therapy Medicinal Products (ATMPs) still requires adherence to quality standards equivalent to centralized manufacturing, making standardization and harmonization of Quality Control (QC) processes across academic production sites critically important [6].

Troubleshooting Common Irreproducibility Issues

What are the main technical sources of irreproducibility in autologous cell therapy manufacturing? The main technical sources stem from variability in both the starting biological material and the complex manufacturing process itself.

- Variable Starting Material: Autologous therapies begin with cells from patients, who exhibit significant biological variation in cell quality, potency, and characteristics [13] [26]. This inherent variability is challenging to control.

- Process-Related Variability: Manual, open processing steps are common in cell therapy manufacturing and introduce risks of contamination and operator-dependent variation [27] [15]. Furthermore, raw materials, reagents, and media can vary between lots, affecting cell expansion and final product characteristics [26].

- Analytical and QC Challenges: A major hurdle is the lack of robust, standardized potency assays and other QC tests. This makes it difficult to reliably compare batches and confirm consistent product function [13] [6].

What methodologies can correct for batch effects in analytical data? For different types of biological data, specific computational batch effect correction methods have been developed. The table below summarizes recommended methodologies for DNA methylation and image-based cell profiling data, which are used for product characterization.

Table 1: Batch Effect Correction Methods for Analytical Data

| Data Type | Recommended Method | Key Principle | Considerations |
|---|---|---|---|
| DNA Methylation (β-values) | ComBat-met [28] | Uses a beta regression framework tailored for proportional data (0-1). | Accounts for over-dispersion and skewness in β-value distributions; superior to methods assuming normal distributions. |
| Image-Based Cell Profiling (e.g., Cell Painting) | Harmony or Seurat RPCA [29] | Harmony uses mixture-model-based correction; Seurat RPCA uses reciprocal PCA and mutual nearest neighbors. | Effectively reduces technical variation while preserving biological signals in high-content imaging data. |

How can I implement a standardized QC strategy to minimize irreproducibility? A harmonized QC strategy is essential for batch release. The protocols below, based on recommendations from the UNITC Consortium for academic CAR-T production, outline the critical tests and methods [6].

Detailed QC Protocols:

Mycoplasma Detection:

- Method: Use validated commercial nucleic acid amplification tests (NAAT) as an alternative to the 28-day culture method.

- Validation: Even with commercially validated kits, perform local validation to confirm detection limits (sensitivity of at least 10 CFU/mL for recommended strains) and ensure compatibility with your specific equipment and sample matrices (cell suspensions/supernatants) [6].

Endotoxin Testing:

- Method: Use Limulus Amebocyte Lysate (LAL) or Recombinant Factor C (rFC) assays.

- Protocol: Validate the test protocol to prevent matrix interference from cell culture media or other product components, which can lead to false positives or negatives [6].

Vector Copy Number (VCN) Quantification:

- Method: Use quantitative PCR (qPCR) or droplet digital PCR (ddPCR).

- Standardization: The technique must be validated to ensure accurate and reproducible quantification of the number of viral vector integrations per cell genome, a critical safety attribute [6].
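The arithmetic behind a ddPCR-based VCN readout can be sketched as follows. This is a minimal sketch that assumes a duplex assay, in which a vector-specific amplicon is normalized to a reference gene present at two copies per diploid genome. Your validated method must fix the choice of reference gene, the amplicon design, and whether VCN is reported per total cell or per transduced cell. The function names and numbers are hypothetical.

```python
def vector_copy_number(vector_copies_per_ul: float,
                       reference_copies_per_ul: float,
                       reference_copies_per_genome: int = 2) -> float:
    """Average vector copies per cell genome from ddPCR target concentrations.

    Assumes the reference amplicon sits in a gene present at
    `reference_copies_per_genome` copies per cell (2 for a diploid autosomal gene).
    """
    if reference_copies_per_ul <= 0:
        raise ValueError("reference concentration must be positive")
    genomes_per_ul = reference_copies_per_ul / reference_copies_per_genome
    return vector_copies_per_ul / genomes_per_ul


def vcn_per_transduced_cell(vcn_bulk: float, fraction_transduced: float) -> float:
    """Rescale a bulk VCN to transduced cells only (e.g., using a flow-measured CAR+ fraction)."""
    if not 0.0 < fraction_transduced <= 1.0:
        raise ValueError("fraction_transduced must be in (0, 1]")
    return vcn_bulk / fraction_transduced


# Hypothetical example: 1,500 vector copies/uL and 2,000 reference copies/uL.
vcn = vector_copy_number(1500.0, 2000.0)      # 1500 / (2000 / 2)
print(vcn)                                     # 1.5
print(vcn_per_transduced_cell(vcn, 0.5))       # 3.0 if 50% of cells are transduced
```

Reporting both values can help when comparing batches whose transduction efficiencies differ.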

Potency Assay:

- Method: Potency testing is a key challenge for complex products such as Tregs. A common approach involves measuring IFN-γ release via ELISA after antigenic stimulation.

- Considerations: For products with multiple mechanisms of action, developing a reliable potency assay that reflects the biological function is complex and may require multi-parameter analyses [30] [6].
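IFN-γ ELISA results are usually read off a standard curve. As a hedged illustration, the sketch below assumes a four-parameter logistic (4PL) curve has already been fitted to the standards. The fitting itself is not shown. The sketch back-calculates a sample concentration and corrects for a hypothetical 1:10 dilution. The parameter values are illustrative only. Responses outside the curve's range are rejected rather than extrapolated.

```python
def four_pl(x: float, a: float, b: float, c: float, d: float) -> float:
    """4PL response: a = response at zero concentration, d = response at infinite
    concentration, c = inflection point, b = slope factor."""
    return d + (a - d) / (1.0 + (x / c) ** b)


def inverse_four_pl(y: float, a: float, b: float, c: float, d: float) -> float:
    """Back-calculate concentration from a measured response y (inverse of four_pl)."""
    lo, hi = min(a, d), max(a, d)
    if not lo < y < hi:
        raise ValueError("response is outside the curve's quantifiable range")
    return c * ((a - d) / (y - d) - 1.0) ** (1.0 / b)


# Hypothetical fitted parameters (OD450 vs. IFN-gamma pg/mL) and a 1:10 sample dilution.
params = {"a": 0.05, "b": 1.2, "c": 250.0, "d": 2.5}
measured_od = 0.9
dilution_factor = 10
ifng_pg_per_ml = inverse_four_pl(measured_od, **params) * dilution_factor
print(round(ifng_pg_per_ml, 1))
```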

The Scientist's Toolkit: Key Research Reagent Solutions

Using standardized, high-quality reagents is fundamental to reducing irreproducibility. The following table lists essential materials used in the field to establish robust manufacturing and QC processes.

Table 2: Essential Research Reagent Solutions for Cell Therapy Manufacturing

| Reagent / Material | Function | Application Example |
|---|---|---|
| GMP-grade Cell Culture Media & Supplements | Provides a consistent, xeno-free environment for cell expansion, reducing variability and contamination risk from animal sera. | Expansion of T-cells, MSCs, and iPSCs [26]. |
| Cytokines (e.g., IL-2, IL-7, IL-15) | Promote expansion and survival of specific cell types and influence final product phenotype during manufacturing. | Critical for T-cell and Treg culture [15]. |
| Magnetic Cell Sorting Beads | Isolate highly pure target cell populations (e.g., CD4+/CD25+ Tregs) from a heterogeneous starting sample, ensuring a consistent input for manufacturing. | Isolation of Tregs from leukapheresis material [30]. |
| Viral Vectors (e.g., Lentivirus) | Deliver genetic material for cell engineering (e.g., introducing CARs or TCRs). Consistency in vector production is critical. | Engineering CAR-T cells and antigen-specific Tregs [30] [15]. |
| CRISPR/Cas9 Components | Enable precise gene editing for knock-in, knock-out, or gene correction in allogeneic and autologous therapies. | Engineering enhanced specificity or safety features into cell products [15]. |
| qPCR/ddPCR Reagents & Assays | Quantify Vector Copy Number (VCN) and other genetic attributes for QC batch release. | Quality control and safety testing of genetically modified cell products [6]. |
| ELISA Kits (e.g., IFN-γ) | Measure cytokine release as a functional readout for potency assays during QC testing. | Potency assessment for T-cell based therapies [6]. |

Advanced Solutions and Future Directions

What technological innovations can help overcome scalability and reproducibility challenges? The field is moving toward increased automation and data-driven process control to enhance reproducibility.

- Automation and Closed Systems: Implementing automated, closed-system bioreactors and processing equipment reduces manual handling, minimizes contamination risk, and improves process consistency [27] [26] [15]. This is crucial for scaling up allogeneic therapies and scaling out patient-specific autologous therapies.

- Process Analytical Technologies (PAT) and AI: Integrating inline sensors and advanced analytics allows for real-time monitoring of Critical Process Parameters (CPPs). Artificial Intelligence (AI) can use this data to enable adaptive process control, automatically adjusting conditions to maintain product CQAs and manage inherent input variability [13] [12].

- Quality by Design (QbD): Adopting QbD principles early in process development involves systematically defining a target product profile, identifying CQAs, and understanding the impact of CPPs on them. This science-based approach is key to developing a robust and reproducible manufacturing process [26].

The following diagram illustrates an integrated, automated workflow that represents the future state of reproducible cell therapy manufacturing.

Benchmarking and Applying Batch Correction Methods: From Omics to Imaging

In autologous cell products research, biological variation between patients is an inherent challenge. This variability is often compounded by technical "batch effects"—non-biological differences introduced when samples are processed in different batches, at different times, or by different personnel. If not corrected, these effects can confound results, leading to incorrect biological conclusions and challenges in process reproducibility. Computational batch correction provides a set of powerful statistical and algorithmic approaches to remove these technical artifacts, allowing for clearer insight into the underlying biology and more reliable comparison of data across experimental batches.

Troubleshooting Guide: Common Batch Correction Issues

FAQ 1: My data shows clear clustering by batch even after correction. What went wrong?

Problem: Batch effect correction methods have been applied, but the data still clusters strongly by technical batch rather than biological group in the visualization.

Investigation & Solutions:

- Verify the Correct Model Specification: Ensure that the batch variable you provided to the tool correctly identifies all technical batches. A common error is mislabeling samples or including a biologically distinct group as a "batch."

- Check for Strong Covariates: A strong biological signal (e.g., major differences between cell types or disease states) might be mistaken for a batch effect. Most batch correction tools accept a "model" or "covariates" parameter. Including the known biological variables of interest there helps the correction preserve these signals while removing technical variance. The table below outlines core reagent solutions used in computational workflows.

Table 1: Key Research Reagent Solutions for Computational Batch Correction

| Item Name | Function / Explanation |
|---|---|
| pyComBat/pyComBat-Seq | A Python tool using empirical Bayes methods to adjust for batch effects in microarray (normally distributed) and RNA-Seq (count-based) data, respectively. [31] |
| Harmony | An algorithm that iteratively corrects embeddings to integrate datasets, removing batch effects while preserving biological structure. [32] |
| Seurat Integration | A widely used toolkit in single-cell genomics that identifies "anchors" between datasets to enable integrated analysis and batch correction. [32] |
| Mutual Nearest Neighbors (MNN) | A method that identifies pairs of cells from different batches that are in a similar biological state and uses them as a basis for correcting the data. [32] |

- Evaluate Alternative Methods: No single method works best for all data types. If one algorithm fails, try another. For instance, if a method designed for continuous, normalized data (like ComBat) fails on raw count data from RNA-Seq, switch to a method designed for counts (like ComBat-Seq). [31]

- Assess Data Preprocessing: Inconsistent normalization across batches can create effects that are difficult to correct. Ensure all batches were preprocessed (e.g., normalized, scaled) using the same pipeline before applying batch correction.

FAQ 2: After correction, my biological signal seems to have been removed. How can I preserve it?

Problem: The correction was too aggressive and has removed or dampened the biological variation of interest.

Investigation & Solutions:

- Use Covariates of Interest: As mentioned in FAQ 1, explicitly model the biological variable you wish to preserve (e.g., a design of `~ biological_group` supplied as the covariate model matrix in ComBat). This instructs the algorithm to remove variance associated with batch while protecting variance associated with your biological group. [31]

- Validate with Known Biological Markers: After correction, check the expression levels of well-established biological markers for your system. If these markers no longer differentiate between groups, the correction may have been too strong.

- Choose a Less Aggressive Method: Some methods are inherently more conservative. For example, Harmony and Seurat Integration are designed to preserve biological signal better than some earlier methods. [32] In one study on deep learning features, ComBat removed technical batch effects while retaining predictive signals for key genetic features such as MSI status. [33]

FAQ 3: How do I handle multiple batch effects or complex experimental designs?

Problem: My experiment involves multiple, overlapping technical variables (e.g., different processing days and different sequencing lanes).

Investigation & Solutions:

- Consolidate Batches: If possible, define a single batch variable that combines all major technical factors (e.g., "Day1_Lane1", "Day1_Lane2", "Day2_Lane1"). This is the simplest approach for many algorithms.

- Use Nested or Blocked Designs: Advanced users can employ more complex statistical models that can account for nested designs (e.g., sequencing lanes nested within processing days). This may require custom modeling frameworks beyond standard out-of-the-box tools.
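Building a consolidated batch variable takes only a few lines of code. Below is a minimal Python sketch that joins per-sample technical factors into labels such as Day1_Lane1. The function name `combined_batch_key` and the example factors are hypothetical.

```python
from collections import Counter
from typing import List, Sequence


def combined_batch_key(*factors: Sequence[str], sep: str = "_") -> List[str]:
    """Join several per-sample technical factors into a single batch label per sample."""
    if not factors:
        raise ValueError("at least one factor is required")
    n = len(factors[0])
    if any(len(f) != n for f in factors):
        raise ValueError("every factor needs one entry per sample")
    return [sep.join(str(v) for v in values) for values in zip(*factors)]


day = ["Day1", "Day1", "Day1", "Day2", "Day2"]
lane = ["Lane1", "Lane2", "Lane2", "Lane1", "Lane1"]
batch = combined_batch_key(day, lane)
print(batch)           # ['Day1_Lane1', 'Day1_Lane2', 'Day1_Lane2', 'Day2_Lane1', 'Day2_Lane1']
print(Counter(batch))  # inspect group sizes before correction
```

Check the size of each combined batch before running a correction. Combinations with very few samples give unstable batch-effect estimates.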

FAQ 4: How can I quantitatively assess the success of my batch correction?

Problem: It's unclear whether the batch correction has been effective.

Investigation & Solutions:

- Visual Inspection: Use Principal Component Analysis (PCA) or t-Distributed Stochastic Neighbor Embedding (t-SNE) plots colored by batch and biological group. Successful correction is indicated by the mixing of batches while the separation of biological groups is maintained.

- Calculate Metrics: Use quantitative metrics to assess batch mixing.

- Principal Component Regression: Regress the first few principal components against the batch variable. A reduction in R-squared value post-correction indicates less variance is explained by batch.

- Local Inverse Simpson's Index (LISI): A metric that quantifies the diversity of batches within local neighborhoods of cells or samples. A higher LISI score after correction indicates better batch mixing. [32]

- Silhouette Score: Measures how similar a sample is to its own biological cluster compared to other clusters. This should be high for biological groups and low for batches after correction.
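The three metrics above can be prototyped with NumPy alone. This is a simplified illustration, not a reference implementation, and the function names are hypothetical. PC regression reports the R² of each leading PC on one-hot batch indicators. The neighbourhood index is an unweighted k-nearest-neighbour inverse Simpson index; the published LISI uses perplexity-calibrated Gaussian weights instead. The silhouette uses Euclidean distance. For real analyses, established implementations such as scikit-learn's `silhouette_score` are preferable.

```python
import numpy as np


def pca_scores(x: np.ndarray, n_components: int) -> np.ndarray:
    """PCA via SVD of the column-centered samples x features matrix."""
    xc = x - x.mean(axis=0)
    u, s, _ = np.linalg.svd(xc, full_matrices=False)
    return u[:, :n_components] * s[:n_components]


def pc_regression_r2(x, batch, n_components=5):
    """R-squared of each leading PC regressed on one-hot batch indicators."""
    scores = pca_scores(np.asarray(x, dtype=float), n_components)
    batch = np.asarray(batch)
    design = (batch[:, None] == np.unique(batch)[None, :]).astype(float)
    coef, *_ = np.linalg.lstsq(design, scores, rcond=None)
    ss_res = ((scores - design @ coef) ** 2).sum(axis=0)
    ss_tot = ((scores - scores.mean(axis=0)) ** 2).sum(axis=0)
    return 1.0 - ss_res / ss_tot


def _pairwise_sq_dist(x):
    # Dense n x n distances: fine for a few thousand samples, not for large single-cell data.
    return ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)


def knn_inverse_simpson(x, labels, k=15):
    """Simplified LISI: inverse Simpson index of label proportions among each
    sample's k nearest neighbours (unweighted, unlike the published LISI)."""
    labels = np.asarray(labels)
    d = _pairwise_sq_dist(np.asarray(x, dtype=float))
    np.fill_diagonal(d, np.inf)                  # exclude the sample itself
    nn = np.argsort(d, axis=1)[:, :k]
    out = np.empty(len(labels))
    for i, idx in enumerate(nn):
        _, counts = np.unique(labels[idx], return_counts=True)
        p = counts / counts.sum()
        out[i] = 1.0 / np.sum(p ** 2)
    return out


def silhouette(x, labels):
    """Mean silhouette width (Euclidean); needs >= 2 labels, singleton clusters score 0."""
    labels = np.asarray(labels)
    d = np.sqrt(_pairwise_sq_dist(np.asarray(x, dtype=float)))
    uniq = np.unique(labels)
    s = np.zeros(len(labels))
    for i in range(len(labels)):
        same = labels == labels[i]
        same[i] = False
        if not same.any():
            continue
        a = d[i, same].mean()                                        # mean intra-cluster distance
        b = min(d[i, labels == u].mean() for u in uniq if u != labels[i])  # nearest other cluster
        denom = max(a, b)
        s[i] = 0.0 if denom == 0 else (b - a) / denom
    return float(s.mean())


# Synthetic check: two biological groups, two batches with a technical shift.
rng = np.random.default_rng(0)
group = np.repeat([0, 1], 40)
batch = np.tile([0, 1], 40)
x = rng.normal(size=(80, 20))
x[:, 0] += 4.0 * group      # biological signal
x[:, 1] += 3.0 * batch      # batch effect
print(pc_regression_r2(x, batch, n_components=3))
print(knn_inverse_simpson(x, batch, k=10).mean())   # 1 = unmixed, 2 = fully mixed (two batches)
print(silhouette(x, group), silhouette(x, batch))
```

Compare each metric before and after correction. The batch R² and the silhouette by batch should fall. The mixing index should rise toward the number of batches. The silhouette by biological group should be maintained.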

Table 2: Performance Comparison of Select Batch Correction Tools

| Tool Name | Primary Data Type | Key Methodology | Reported Performance |
|---|---|---|---|
| pyComBat (Parametric) [31] | Microarray (Normal) | Empirical Bayes | ✓ Correction efficacy similar to original ComBat; ✓ 4-5x faster computation time vs. R ComBat |
| pyComBat-Seq [31] | RNA-Seq (Counts) | Empirical Bayes (Negative Binomial) | ✓ Outputs identical adjusted counts to R ComBat-Seq; ✓ 4-5x faster computation time |
| Harmony [32] | Single-cell Genomics | Iterative clustering & integration | ✓ Effective for small sample sizes & >2 batches; ✓ Designed to preserve biological variance |
| Seurat Integration [32] | Single-cell Genomics | Mutual Nearest Neighbors (MNN) variant | ✓ Effective for complex single-cell datasets; ✓ Widely adopted with extensive community support |

Experimental Protocol: A Standard Workflow for Batch Correction with ComBat

This protocol provides a detailed methodology for applying the ComBat algorithm to a gene expression matrix, as validated in performance benchmarks. [31]

Objective: To remove technical batch effects from a combined gene expression dataset comprising multiple batches, enabling integrated downstream analysis.

Materials:

- Software: Python with the `inmoose` package installed, or R with the `sva` package.

- Input Data: A combined gene expression matrix (genes/features x samples) with associated metadata. Data should be preprocessed and normalized consistently across all batches. For microarray data, this is typically log2-transformed intensity values. For RNA-Seq raw counts, use ComBat-Seq.

Procedure:

Data Preparation and Import:

- Format your data into a single expression matrix. Ensure row names are gene identifiers and column names are sample IDs.

- Create a batch information vector where each element corresponds to the batch of the sample in the respective column of the expression matrix.

- (Optional) Create a model matrix containing any biological covariates of interest you wish to preserve.

Algorithm Execution:

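In Python using `inmoose`, pass the expression matrix, the batch vector, and (optionally) the covariate model matrix to the ComBat function. The function outputs a new, batch-corrected expression matrix with the same dimensions as the input.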
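The NumPy sketch below implements the per-gene location/scale model at the core of ComBat. It standardizes the data with a pooled within-batch variance, estimates each batch's additive (γ) and multiplicative (δ) effects, and rescales every batch to a common mean and variance. It deliberately omits ComBat's empirical Bayes shrinkage of γ and δ and its covariate model, both of which matter for small batches. It is therefore an illustration, not a substitute for `ComBat` in R's sva package or for inmoose's ComBat entry point for normalized data (`pycombat_norm` in `inmoose.pycombat`; check the installed version's documentation for exact arguments). The function name is hypothetical.

```python
import numpy as np


def combat_location_scale(expr, batch):
    """ComBat-style per-gene location/scale adjustment WITHOUT empirical Bayes
    shrinkage and without biological covariates (a simplified sketch).

    expr  : genes x samples array, e.g. log2 microarray intensities
    batch : one batch label per sample (column)
    Assumes every batch has >= 2 samples and no gene is constant within a batch.
    """
    y = np.asarray(expr, dtype=float)
    batch = np.asarray(batch)
    if y.shape[1] != batch.shape[0]:
        raise ValueError("batch needs one label per column of expr")
    labels = np.unique(batch)
    n = y.shape[1]

    # Per-batch gene means and their sample-size-weighted average (ComBat's alpha_g).
    means = {b: y[:, batch == b].mean(axis=1) for b in labels}
    alpha = sum((batch == b).sum() / n * means[b] for b in labels)

    # Pooled within-batch standard deviation per gene (divides by n, as ComBat does).
    resid = y.copy()
    for b in labels:
        resid[:, batch == b] -= means[b][:, None]
    sigma = np.sqrt((resid ** 2).mean(axis=1))

    adjusted = np.empty_like(y)
    for b in labels:
        cols = batch == b
        if cols.sum() < 2:
            raise ValueError(f"batch {b!r} needs at least 2 samples")
        z = (y[:, cols] - alpha[:, None]) / sigma[:, None]   # standardized data
        gamma = z.mean(axis=1)                                # additive batch effect
        delta = z.std(axis=1, ddof=1)                         # multiplicative batch effect
        adjusted[:, cols] = sigma[:, None] * (z - gamma[:, None]) / delta[:, None] + alpha[:, None]
    return adjusted


# Synthetic example: batch B carries an additive and a multiplicative shift.
rng = np.random.default_rng(1)
expr = rng.normal(8.0, 1.0, size=(100, 12))
batch = np.array(["A"] * 6 + ["B"] * 6)
expr[:, batch == "B"] = expr[:, batch == "B"] * 1.5 + 2.0
corrected = combat_location_scale(expr, batch)
print(corrected[:, batch == "A"].mean(axis=1)[:3])
print(corrected[:, batch == "B"].mean(axis=1)[:3])
```

After adjustment, every batch has the same per-gene mean and standard deviation. Without shrinkage, this sketch can over-correct when batches are small, which is the situation the empirical Bayes step in ComBat is designed to handle.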

Post-Correction Validation:

- Perform PCA on the corrected matrix.

- Visualize the results by plotting the first two principal components, coloring the points by both batch and biological group.

- Calculate quantitative mixing metrics like LISI or silhouette scores to compare pre- and post-correction data.

Downstream Analysis:

- Proceed with your biological analysis (e.g., differential expression, clustering) using the batch-corrected matrix.

Visualizing the Batch Correction Workflow

The following diagram illustrates the logical flow of a standard batch correction process, from raw data to validated output.

Batch Correction Process

Tool Selection Logic

Choosing the right tool is critical. The following diagram provides a logical pathway for selecting an appropriate batch correction method based on your data type and research context.

Tool Selection Guide
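The diagram itself is not reproduced here. The sketch below encodes one possible selection pathway using only recommendations stated elsewhere in this guide:

- ComBat-met for β-values.
- ComBat for normalized microarray data.
- ComBat-Seq for raw counts.
- scANVI when cell labels exist.
- scVI or Seurat rPCA for single-cell datasets above roughly 200,000 cells.
- Harmony or Seurat RPCA for image-based profiles.

The data-type keys and the function name are hypothetical, and this is not a reproduction of the original diagram.

```python
from typing import List


def suggest_batch_correction(data_type: str, n_cells: int = 0,
                             has_cell_labels: bool = False) -> List[str]:
    """Candidate methods, in suggested order, drawn from the tables in this guide."""
    if data_type == "methylation_beta":          # proportional 0-1 data
        return ["ComBat-met"]
    if data_type == "microarray":                # normalized, roughly normal intensities
        return ["ComBat (pyComBat)"]
    if data_type == "rnaseq_counts":             # raw bulk RNA-Seq counts
        return ["ComBat-Seq (pyComBat-Seq)"]
    if data_type == "single_cell":
        if has_cell_labels:                      # label-guided integration
            return ["scANVI", "scVI"]
        if n_cells > 200_000:                    # scalability first
            return ["scVI", "Seurat rPCA"]
        return ["Harmony", "Seurat", "scVI", "Scanorama"]
    if data_type == "image_profiles":            # e.g., Cell Painting well-level profiles
        return ["Harmony", "Seurat RPCA"]
    raise ValueError(f"unrecognized data type: {data_type!r}")


print(suggest_batch_correction("rnaseq_counts"))                 # ['ComBat-Seq (pyComBat-Seq)']
print(suggest_batch_correction("single_cell", n_cells=700_000))  # ['scVI', 'Seurat rPCA']
```

For smaller unlabeled single-cell datasets, the order places the methods benchmarked as strong on simpler tasks (Harmony, Seurat) before those favoured for complex tasks (scVI, Scanorama). Re-rank these options according to your own pilot results.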

In the development of autologous cell products, such as Treg cell therapies, managing batch variation is a critical challenge. The manufacturing process starts with patient-specific cells, leading to inherent biological and technical variability across batches [30]. Single-cell genomics has become indispensable for characterizing these complex products. Its analysis, however, relies heavily on computational data integration methods to separate true biological signals from unwanted technical noise. This guide summarizes independent benchmarks of leading integration tools, focusing on Harmony, Seurat, and scVI. It also provides practical troubleshooting advice for reliable analysis in cell therapy research and development.

Independent benchmarking studies provide crucial insights for method selection. The table below summarizes key performance metrics across different data integration tasks, helping you choose the right tool for your specific challenge in autologous cell product research [34].

| Method | Best For | Batch Removal Metrics | Bio-Conservation Metrics | Scalability | Key Strengths |
|---|---|---|---|---|---|
| Harmony | simpler tasks, scATAC-seq | Good kBET, iLISI | Moderate isolated label conservation | Fast | Fast, good for simpler batch effects [34] |
| Seurat v3 | simpler tasks, multi-omics | Good on simple tasks | Good ARI, NMI | Moderate | Versatile, multiple integration options [34] [35] |
| scVI | complex atlases, large datasets | Excellent on complex tasks | Excellent trajectory conservation | Excellent for large N | Scalable, handles complex nested batches [34] [36] |
| Scanorama | complex RNA-seq tasks | High kBET & iLISI | High trajectory conservation | Good | Robust performance on complex RNA-seq [34] |
| scANVI | annotation-assisted tasks | Excellent with labels | Excellent with labels | Good | Leverages prior knowledge when available [34] |

Table 1: Benchmarking performance of major single-cell data integration methods across various integration tasks and data modalities.

Troubleshooting Common Integration Failures

FAQ 1: The integration did not remove the batch effect. Several clusters still contain cells from only one study.

Problem: Your UMAP shows distinct clusters comprised solely of cells from a single batch or study, indicating failed integration.

Solutions:

- Verify the `batch_key` Parameter (scVI): The batch key must correctly identify all sources of technical variation. For a complex design with multiple individuals and conditions, create a new combined batch key (e.g., `individual_condition`) instead of using a single factor such as 'condition' [37].

- Adjust Harmony Iterations: Increase the `max.iter.harmony` parameter beyond the default to give the algorithm more iterations to converge. This addresses the "did not converge" warning [36] [38].

- Try a More Powerful Method: For large or complex datasets with strong batch effects, consider switching to a more robust method such as scVI, which often handles significant technical variation better [34] [36].

- Check Preprocessing: Ensure proper normalization and highly variable gene selection before integration. These steps can significantly affect the performance of most methods [34].

FAQ 2: The integration removed too much variation, and my biological signal of interest is lost.

Problem: After integration, cell types or experimental conditions that should be distinct are artificially merged, suggesting over-correction.

Solutions:

- Re-evaluate Biology vs. Batch: If your "batch" is a strong biological condition (e.g., stimulated vs. unstimulated), complete separation in UMAP may be biologically real, not a technical artifact. Do not force integration in this case [37].

- Inspect HVG Selection: Highly variable gene (HVG) selection improves integration. Re-running the analysis with a focused set of HVGs can help preserve relevant biological variance [34].

- Use a Less Aggressive Method: Methods can vary in their propensity to over-correct. If using a strong deep learning model like scVI, try a lighter method like Scanorama or Harmony, which may offer a better balance [34].

- Leverage Label-Guided Integration: If cell-type annotations are available, use a method like scANVI that can use labels to guide integration and explicitly preserve known biological structures [34].

FAQ 3: The tool is slow or fails to run due to memory issues on my large dataset.

Problem: The integration process takes an impractically long time or crashes, often with memory errors, when analyzing datasets with hundreds of thousands of cells.

Solutions:

- Utilize Scalable Methods: For very large datasets (e.g., >200,000 cells), scVI is specifically designed for scalability and is often the most efficient choice [34] [39].

- Optimize Seurat's Workflow: When using Seurat, employ the reciprocal PCA (rPCA) workflow, which is faster and more memory-efficient than the standard CCA workflow, especially for large datasets [39].

- Adjust scVI Parameters: Even for very large datasets (e.g., ~700,000 cells), the default of 10 latent variables (`n_latent`) is typically sufficient. Increasing this number is not usually necessary for batch correction and increases the computational load [37].

Essential Experimental Protocols

Standardized Integration Workflow

To ensure reproducible and comparable results, follow this generalized workflow before applying any specific integration method.

Diagram 1: Standard pre-integration workflow.

Protocol: Preprocessing for Single-Cell Data Integration

Quality Control & Filtering:

- Filter out cells with high mitochondrial gene percentage (indicates low viability).

- Remove cells with an abnormally low or high number of detected genes or UMIs.

- Filter out potential doublets based on unusually high gene/UMI counts.

Normalization:

- Apply a normalization method to correct for differences in sequencing depth between cells (e.g., LogNormalize in Seurat, or SCTransform for a more advanced approach).

Feature Selection:

- Select 2,000-5,000 Highly Variable Genes (HVGs) for downstream analysis. This focuses the integration on the most biologically relevant features and improves performance for most methods [34].

Scaling and Dimensionality Reduction:

- Scale each gene to zero mean and unit variance, optionally regressing out technical factors such as UMI count.

- Perform Principal Component Analysis (PCA) on the scaled HVGs to obtain a low-dimensional representation of the data. This PCA matrix is the direct input for many integration methods like Harmony.
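The protocol above can be sketched end to end with NumPy. This is a simplified, hypothetical implementation, not a replacement for Seurat or Scanpy:

- The QC thresholds are placeholders.
- The mitochondrial prefix "MT-" assumes human gene symbols.
- Normalization mirrors the LogNormalize idea: each cell is scaled to 10,000 counts, then log1p-transformed.
- HVGs are ranked by plain variance rather than Seurat's variance-stabilizing method.
- Doublets are only crudely handled, through the upper gene-count bound.

```python
import numpy as np


def preprocess(counts, gene_names, min_genes=200, max_genes=6000,
               max_mito_frac=0.2, n_hvg=2000, n_pcs=30, scale_factor=1e4):
    """counts: cells x genes raw UMI matrix. Returns (pc_scores, kept_cell_idx, hvg_idx).
    All threshold defaults are illustrative placeholders."""
    counts = np.asarray(counts, dtype=float)
    gene_names = np.asarray(gene_names).astype(str)
    # 1. QC: detected genes per cell and mitochondrial read fraction.
    n_genes = (counts > 0).sum(axis=1)
    total = counts.sum(axis=1)
    mito = np.char.startswith(gene_names, "MT-")
    mito_frac = counts[:, mito].sum(axis=1) / np.maximum(total, 1)
    keep = (n_genes >= min_genes) & (n_genes <= max_genes) & (mito_frac <= max_mito_frac)
    x = counts[keep]
    # 2. LogNormalize-style: scale each cell to `scale_factor` total counts, then log1p.
    x = np.log1p(x / x.sum(axis=1, keepdims=True) * scale_factor)
    # 3. HVGs: simple ranking by variance of log-normalized values (not Seurat's 'vst').
    n_hvg = min(n_hvg, x.shape[1])
    hvg_idx = np.argsort(x.var(axis=0))[::-1][:n_hvg]
    x = x[:, hvg_idx]
    # 4. Scale each gene to zero mean, unit variance (constant genes left at zero).
    sd = x.std(axis=0)
    x = (x - x.mean(axis=0)) / np.where(sd > 0, sd, 1.0)
    # 5. PCA via SVD; these scores are the usual input to Harmony.
    n_pcs = min(n_pcs, min(x.shape))
    u, s, _ = np.linalg.svd(x, full_matrices=False)
    return u[:, :n_pcs] * s[:n_pcs], np.flatnonzero(keep), hvg_idx


# Synthetic example: 300 cells x 3,000 genes, one damaged cell with high mitochondrial reads.
rng = np.random.default_rng(7)
counts = rng.poisson(1.0, size=(300, 3000)).astype(float)
genes = np.array([f"MT-G{i}" for i in range(13)] + [f"GENE{i}" for i in range(2987)])
counts[0, :13] = 1000.0
pcs, kept, hvg = preprocess(counts, genes)
print(pcs.shape, len(kept), len(hvg))
```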

Protocol: Application of Key Integration Methods

Harmony in R (Post-PCA)

Code 1: Running Harmony integration in R.

scVI in Python (Uses Raw Counts)

Code 2: Running scVI integration in Python.

The Scientist's Toolkit: Research Reagent Solutions

The table below lists key computational "reagents" and their functions in the data integration process, crucial for ensuring the consistency and quality of your analysis, much like wet-lab reagents in cell therapy manufacturing.

| Tool / Resource | Function | Role in Experimental Pipeline |
|---|---|---|
| Seurat (R) | Comprehensive toolkit for single-cell analysis. | Primary environment for data preprocessing, analysis, and visualization; runs Harmony. |
| scvi-tools (Python) | Deep learning library for single-cell omics. | Runs scVI and scANVI for scalable, powerful integration, especially on large datasets. |
| Harmony (R/Python) | Fast, linear integration algorithm. | Efficiently integrates datasets after PCA, often via Seurat's RunHarmony function. |
| Highly Variable Genes (HVGs) | A selected subset of informative genes. | Critical preprocessing step that improves integration performance by reducing noise [34]. |
| Principal Component Analysis (PCA) | Linear dimensionality reduction technique. | Creates the low-dimensional representation required as input for methods like Harmony. |
| kBET / LISI Metrics | Quantitative batch effect evaluation. | Calculates metrics to objectively assess integration quality, beyond visual inspection [34]. |

Table 2: Essential computational tools and resources for single-cell data integration.

Advanced Troubleshooting Guide

When standard fixes fail, this decision diagram helps systematically diagnose and resolve persistent integration issues.

Diagram 2: Troubleshooting workflow for failed integrations.

Applying Correction Methods to Image-Based Cell Profiling Data

In autologous cell products research, ensuring consistent and reliable data is paramount. A significant challenge in this field is batch variation, where technical differences between experiments can obscure true biological signals. This technical support center provides guides and FAQs on applying correction methods to image-based cell profiling data, a critical step for validating the quality and consistency of your autologous cell therapies.

Frequently Asked Questions (FAQs)

1. What are batch effects and why are they a problem in image-based profiling? Batch effects are technical variations in data not due to the biological variables being studied. They can arise from differences in reagent lots, processing times, equipment calibration, or experimental platforms. In image-based profiling, particularly with assays like Cell Painting, these effects severely limit the ability to integrate and interpret data collected across different laboratories and equipment, potentially leading to incorrect biological conclusions [29].

2. Which batch correction methods are most effective for Cell Painting data? Recent benchmarks using the JUMP Cell Painting dataset found that methods like Harmony and Seurat RPCA consistently rank among the top performers across various scenarios. These methods effectively reduce batch effects while conserving biological variance. The best method can depend on your specific experimental design and the complexity of the batch effects [29].

3. My CellProfiler pipeline is detecting small spots inconsistently in batch mode. What should I check? This is a common segmentation challenge. Potential solutions include:

- Fixed Coordinates: If using a crop module, use fixed coordinates for each image to ensure the same region is analyzed, provided all plates are imaged without the camera or stage moving.

- Adaptive Thresholding: Use adaptive thresholding in the IdentifyPrimaryObjects module to better detect dimmer spots, though this may also pick up some non-relevant spots.

- Image Artifacts: Check for and minimize any illuminating light that causes reflections, as this can significantly interfere with image processing [40].

4. How can I improve my profiles before applying batch correction? Data cleaning is a crucial preprocessing step. Key strategies include:

- Cell-level outlier detection: Remove outlier cells that do not show valid biological effects, often resulting from segmentation errors.

- Regress out cell area: Neutralize the effect of cell area on other features, as it is a pivotal contributor that can overly influence similarity metrics.

- Remove toxic drugs: Filter out wells with an extremely low number of cells.

Applying these steps can help preserve more meaningful biological information in your profiles [41].

Troubleshooting Guides

Guide 1: Benchmarking Batch Correction Methods

Problem: A researcher needs to choose an appropriate batch correction method to integrate Cell Painting data from multiple laboratories using different microscopes.

Solution: Follow this benchmarked protocol to select and apply a high-performing method.

Experimental Protocol:

- Data Preparation: Start with population-averaged well-level profiles. These are computed by mean-averaging the morphological feature vectors for all cells in a well [29].

- Method Selection: The table below summarizes high-performing methods based on a recent benchmark study. Harmony and Seurat RPCA are recommended starting points due to their consistent performance and computational efficiency [29].

- Application: Apply the chosen method using standard software packages (e.g., in R or Python). Note that methods such as fastMNN, MNN, Scanorama, and Harmony require recomputing batch correction across the entire dataset when new profiles are added [29].

- Evaluation: Use a combination of metrics to evaluate success:

- Batch Effect Reduction: Assess the mixing of batches in low-dimensional embeddings.

- Biological Signal Preservation: Evaluate performance on a biological task, such as replicate retrieval (finding the replicate sample of a given compound across batches) [29].

Table 1: Benchmarking of Selected Batch Correction Methods

| Method Name | Underlying Approach | Key Requirements | Notable Characteristics |
|---|---|---|---|
| Harmony | Mixture model | Batch labels | Iterative, removes batch effects within clusters of cells; consistently high rank [29] |
| Seurat RPCA | Nearest neighbors | Batch labels | Uses reciprocal PCA; allows for dataset heterogeneity; fast for large datasets [29] |
| ComBat | Linear model | Batch labels | Models batch effects as additive/multiplicative noise; can be applied to new data [29] |
| scVI | Neural network | Batch labels | Uses a variational autoencoder; does not require full recomputation for new data [29] |
| Sphering | Linear transformation | Negative controls | Computes a whitening transformation based on negative control samples [29] |
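Two steps from this guide can be sketched together: mean-aggregating cells into well-level profiles, and fitting a sphering (whitening) transform on negative-control wells. The sketch assumes ZCA whitening, one common choice of whitening transform, with a small regularizer `eps`. The function names, the well layout, and the designation of the first 30 wells as DMSO controls are hypothetical. A stable covariance estimate needs more control wells than features.

```python
import numpy as np


def well_profiles(cell_features, well_ids):
    """Mean-aggregate cell-level features (cells x features) into one profile per well."""
    cell_features = np.asarray(cell_features, dtype=float)
    well_ids = np.asarray(well_ids)
    wells = np.unique(well_ids)
    profiles = np.vstack([cell_features[well_ids == w].mean(axis=0) for w in wells])
    return wells, profiles


def fit_sphering(control_profiles, eps=1e-6):
    """Fit a ZCA whitening transform on negative-control profiles; returns (mean, W)."""
    mu = control_profiles.mean(axis=0)
    cov = np.cov(control_profiles, rowvar=False)
    vals, vecs = np.linalg.eigh(cov)
    vals = np.clip(vals, 0.0, None)                           # guard tiny negative eigenvalues
    w = vecs @ np.diag(1.0 / np.sqrt(vals + eps)) @ vecs.T   # symmetric W ~ C^(-1/2)
    return mu, w


def apply_sphering(profiles, mu, w):
    """Center on the control mean and whiten; applied to treated and control wells alike."""
    return (profiles - mu) @ w


rng = np.random.default_rng(2)
cells = rng.normal(size=(600, 10))                      # 600 cells x 10 features
wells = np.repeat([f"W{i:02d}" for i in range(60)], 10) # 10 cells per well
names, prof = well_profiles(cells, wells)
is_control = np.arange(len(names)) < 30                 # hypothetical DMSO wells
mu, w = fit_sphering(prof[is_control])
sphered = apply_sphering(prof, mu, w)
print(sphered.shape)
```

After sphering, the control wells have approximately identity covariance. Variation that the controls share, much of it technical, is thereby downweighted in the treated profiles.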

Guide 2: Preprocessing and Data Cleaning for Profile Enhancement

Problem: Morphological profiles are noisy, leading to poor performance in downstream tasks like predicting a drug's mechanism of action.

Solution: Implement a data cleaning pipeline to enhance profile quality before any downstream analysis.

Experimental Protocol:

- Illumination Correction: Apply a retrospective multi-image correction method. This builds a correction function using all images from an experiment (e.g., per plate) to recover true image intensities, which is crucial for accurate segmentation and feature measurement [42].

- Cell-level Outlier Detection: Detect and remove outlier cells using an unsupervised method like Histogram-Based Outlier Score (HBOS). This helps eliminate cells that may bias aggregated well-level profiles, for instance, due to segmentation errors creating overly large or small cells [41].

- Regress Out Cell Area: The cell area feature heavily influences many other morphological features. To capture more meaningful biological information, regress each feature against the cell area and use the residuals for downstream analysis [41].

- Filter Non-informative Compounds: Remove "outlier drugs" or compounds that do not produce a meaningful change in the features. This can be done by selecting only compounds whose median replicate correlations are greater than the 95th percentile of a null distribution formed from non-replicate correlations [41].
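Two of these cleaning steps, cell-area regression and replicate-correlation filtering, are sketched below in NumPy. The sketch makes three assumptions:

- The area regression uses ordinary least squares with an intercept.
- Similarity between well profiles is Pearson correlation.
- The null distribution is a simplified one, built from all pairwise correlations between non-replicate wells. The cited approach may construct its null differently.

The function names and the synthetic data are hypothetical.

```python
import numpy as np


def regress_out_area(features, area):
    """Residuals of each feature (cells x features) after OLS on cell area plus intercept."""
    features = np.asarray(features, dtype=float)
    area = np.asarray(area, dtype=float)
    design = np.column_stack([np.ones_like(area), area])
    coef, *_ = np.linalg.lstsq(design, features, rcond=None)
    return features - design @ coef


def informative_compounds(profiles, compound_ids, percentile=95.0):
    """Keep compounds whose median replicate correlation exceeds the given
    percentile of correlations between non-replicate well profiles."""
    ids = np.asarray(compound_ids)
    corr = np.corrcoef(np.asarray(profiles, dtype=float))   # wells x wells Pearson r
    iu = np.triu_indices(len(ids), k=1)
    is_replicate = ids[iu[0]] == ids[iu[1]]
    threshold = np.percentile(corr[iu][~is_replicate], percentile)
    kept = []
    for c in np.unique(ids):
        idx = np.flatnonzero(ids == c)
        if len(idx) < 2:
            continue                                         # no replicates to compare
        rep = corr[np.ix_(idx, idx)][np.triu_indices(len(idx), k=1)]
        if np.median(rep) > threshold:
            kept.append(str(c))
    return kept, float(threshold)


# Hypothetical data: 5 active compounds with reproducible signatures, 5 inert ones.
rng = np.random.default_rng(4)
n_features, n_reps = 200, 4
rows, ids = [], []
for k in range(10):
    signature = 3.0 * rng.normal(size=n_features) if k < 5 else np.zeros(n_features)
    for _ in range(n_reps):
        rows.append(signature + rng.normal(size=n_features))
        ids.append(f"cpd{k}")
kept, thr = informative_compounds(np.vstack(rows), ids)
print(kept, round(thr, 3))
```

The area regression is applied to cell-level features before well aggregation. The replicate filter is applied to the aggregated well-level profiles.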

Table 2: Key Data Cleaning Steps for Profile Enhancement

| Processing Step | Function | Recommended Tool/Method |
|---|---|---|
| Illumination Correction | Corrects for uneven lighting in raw images | Retrospective multi-image method [42] |
| Outlier Cell Removal | Removes cells that are artifacts of segmentation or other errors | Histogram-Based Outlier Score (HBOS) [41] |
| Cell Area Regression | Neutralizes the dominant effect of cell size on other features | Linear regression (using residuals) [41] |
| Non-informative Compound Filtering | Filters out treatments with no discernible biological effect | Replicate correlation analysis [41] |

The following workflow diagram illustrates how these data cleaning steps integrate into a broader image-based profiling pipeline.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Image-Based Cell Profiling Experiments

| Item | Function |
|---|---|
| Cell Painting Assay Kits | Provides a standardized set of fluorescent dyes to label eight cellular components (nucleus, nucleolus, ER, Golgi, mitochondria, plasma membrane, cytoplasm, cytoskeleton), enabling rich morphological profiling [29]. |
| High-Throughput Microscopy Systems | Automated microscopes for acquiring thousands of images from multi-well plates in a time- and cost-effective manner [42]. |
| CellProfiler Software | Open-source software for automated image analysis, including illumination correction, segmentation, and feature extraction [29] [41]. |
| Negative Control (e.g., DMSO) | A vehicle-control treatment that does not induce morphological changes. Essential for normalization and for methods like Sphering that require control samples to model technical variation [29] [41]. |
| Automated Cell Counter | Provides accurate and consistent cell counts and viability assessments during cell seeding, reducing a key source of human error [43]. |
| Electronic Lab Notebook (ELN) | A platform to structure data entry, manage equipment calibration, and automate workflows, thereby reducing transcriptional and decision-making errors [44]. |

Integrating Correction into the Autologous Cell Production Workflow

Troubleshooting Guide: Addressing Common Production Issues

FAQ 1: What are the primary sources of batch-to-batch variation in autologous cell therapies, and how can they be controlled?

Batch-to-batch variation is a significant challenge in autologous cell therapy manufacturing. The main sources and their control strategies are summarized in the table below.

Table 1: Key Sources and Control Strategies for Batch-to-Batch Variation

| Source of Variability | Impact on Production | Corrective and Control Strategies |
|---|---|---|
| Patient-specific Factors (disease severity, prior treatments, age, health status) [2] | Affects initial cell quality, quantity, and functionality; influences expansion potential and final product yield [2] [45]. | Implement stringent patient eligibility criteria [2]. Design flexible manufacturing processes that can accommodate variable growth kinetics [2] [46]. |
| Apheresis Collection (different protocols, devices, operator training, anticoagulants) [2] | Leads to differences in the composition, viability, and purity of the starting cellular material [2] [14]. | Standardize apheresis protocols and operator training across collection sites [2]. Specify the use of a particular apheresis collection device to increase consistency [2]. |
| Raw Material Variability (cell culture media, cytokines, activation beads) [2] [47] | Can alter cell expansion, differentiation, and final product phenotype [2]. | Implement robust quality control for raw materials [15]. Use a risk-based approach to define Critical Quality Attributes (CQAs) for starting materials [2]. |
| Manual, Open Process Steps [46] | Increases risk of contamination and human error, leading to inconsistencies and batch failures [46]. | Adopt closed and automated manufacturing systems to reduce manual touchpoints [46]. |
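
Because patient-specific factors change expansion kinetics, a fixed culture duration can overshoot or undershoot the target dose. Under a simplifying assumption of unconstrained exponential growth (real cultures have lag phases and eventually plateau), the time needed is the doubling time multiplied by the number of doublings, log2(target / starting cells). The function name and the numbers below are hypothetical.

```python
import math

def days_to_target(start_viable_cells: float, target_cells: float, doubling_time_days: float) -> float:
    """Days of exponential growth needed to reach target_cells (assumes no lag or plateau)."""
    if start_viable_cells <= 0 or target_cells <= 0 or doubling_time_days <= 0:
        raise ValueError("all inputs must be positive")
    if target_cells <= start_viable_cells:
        return 0.0  # already at or above the target dose
    doublings = math.log2(target_cells / start_viable_cells)
    return doubling_time_days * doublings

# Hypothetical patients: same starting cell number, different doubling times.
for patient, td in [("fast expander", 1.5), ("slow expander", 3.0)]:
    print(patient, days_to_target(1e8, 1.6e9, td))  # 4 doublings: 6.0 vs 12.0 days
```

With the same 16-fold expansion requirement, the slower-expanding patient needs twice as long. Harvesting when a target cell number is reached, rather than on a fixed day, is one way a process can accommodate this.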

FAQ 2: Our process frequently generates out-of-specification (OOS) products. What steps can we take to reduce this occurrence and what are the regulatory considerations for using OOS products?

The generation of OOS products is a recognized challenge in autologous therapies, primarily due to the inherent variability of patient-derived starting materials [48]. In some cases, for patients with no alternative treatment options, OOS products may be used on compassionate grounds following a thorough risk-benefit assessment [48].

Corrective Actions to Minimize OOS Rates:

- Process Understanding: Intentionally introduce donor variability during process development to understand which CQAs are truly indicative of manufacturing outcomes [2].

- In-process Controls: Implement process analytical technologies (PATs) that provide real-time data for tighter process control [2]. Conduct in-process quality checks for quicker decision-making [2].

- Process Automation: Automate critical steps like cell isolation and expansion to enhance consistency and reduce failures caused by contamination [46].

Regulatory Considerations for OOS Use:

- In the US: OOS products may be supplied under an Expanded Access Program (EAP) following an FDA-reviewed Investigational New Drug (IND) application, IRB approval, and patient consent [48].

- In Europe: OOS Advanced Therapy Medicinal Products (ATMPs) can be provided as commercial products under exceptional circumstances. The marketing authorization holder (MAH) must perform a risk assessment, and the treating physician makes the final decision after informing the patient [48].

- Documentation: Meticulous documentation of the risk assessment and justification for OOS product use is mandatory [48].

Experimental Protocols for Process Control

Detailed Methodology: Conducting a Comparability Study for a Process Change

When integrating a corrective action or process improvement (e.g., new raw material, automated equipment), a comparability study is essential to demonstrate the change does not adversely impact the product [47].

Objective: To demonstrate that the cell therapy product manufactured after a process change is comparable to the product manufactured before the change in terms of critical quality attributes (CQAs), safety, and efficacy.

Protocol:

Risk Assessment and Study Design:

Sample Manufacturing:

- Manufacture multiple batches (as feasible given autologous batch size limitations) using both the old and new processes.

- Acknowledge the inherent donor variability. Use a sufficient number of donor samples representing a range of expected variability (e.g., from healthy and "exhausted" donors) to ensure the process is robust [2] [47].

Analytical Testing and Data Collection:

- Test pre- and post-change products against a panel of CQAs. The table below outlines a recommended analytical framework.

Table 2: Analytical Framework for Comparability Studies

| Quality Attribute Category | Specific Test Metrics | Brief Explanation of Function |
|---|---|---|
| Identity & Purity | Flow cytometry for cell surface markers (e.g., CD3, CD4, CD8, CAR-positive %) [15] [45] | Confirms the identity of the cell population and the proportion of successfully engineered cells. |
| Potency | In vitro cytotoxicity assays, cytokine secretion profiling [15] | Measures the biological activity and therapeutic function of the product. |
| Viability | Cell count and viability (e.g., via trypan blue exclusion) [2] [15] | Assesses the health and proportion of live cells in the final product. |
| Safety | Sterility, mycoplasma, endotoxin testing [15] | Ensures the product is free from microbial contamination and that endotoxin is below the specified limit. |
| Characterization | T-cell immunophenotyping (e.g., naïve, memory, effector subsets) [45] | Provides deeper insight into the cell composition, which can impact persistence and efficacy. |

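Data Analysis and Conclusion:

- Use statistical methods suited to small, donor-limited data sets, such as equivalence testing against a pre-specified margin; a non-significant difference test alone does not demonstrate comparability.

- Define acceptance criteria for comparability before testing begins. If the results fall within these criteria, the products are considered comparable.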

- If CQAs are not comparable, a root cause investigation must be conducted, and a clinical bridging study may be required [47].
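
One way to apply pre-specified acceptance criteria is a two one-sided tests (TOST) equivalence check: an attribute is declared comparable if the 90% confidence interval of the mean difference lies entirely inside ±margin. The sketch below assumes a paired design in which each donor's starting material is split between the old and new process. It hard-codes one-sided 95% t critical values from standard tables, so it only handles 2 to 11 donors; a statistics package would normally supply the quantiles. The margin and the data are hypothetical.

```python
import math
from statistics import fmean, stdev

# One-sided 95% Student t critical values t(0.95, df), copied from standard tables.
T_CRIT_95 = {1: 6.314, 2: 2.920, 3: 2.353, 4: 2.132, 5: 2.015,
             6: 1.943, 7: 1.895, 8: 1.860, 9: 1.833, 10: 1.812}

def tost_paired(old, new, margin):
    """Paired TOST at alpha = 0.05: return ((ci_low, ci_high), equivalent?)."""
    if len(old) != len(new):
        raise ValueError("old and new results must be paired by donor")
    diffs = [n - o for o, n in zip(old, new)]  # new minus old, per donor
    df = len(diffs) - 1
    if df not in T_CRIT_95:
        raise ValueError("lookup table only covers 2 to 11 paired donors")
    mean_diff = fmean(diffs)
    std_err = stdev(diffs) / math.sqrt(len(diffs))
    half_width = T_CRIT_95[df] * std_err
    ci = (mean_diff - half_width, mean_diff + half_width)  # two-sided 90% CI
    # Both one-sided tests reject at alpha = 0.05 exactly when the 90% CI
    # lies strictly inside (-margin, +margin).
    return ci, (-margin < ci[0] and ci[1] < margin)

# Hypothetical % CAR-positive results for four donors split across both processes.
old_process = [60.0, 70.0, 65.0, 72.0]
new_process = [62.0, 69.0, 66.0, 73.0]
print(tost_paired(old_process, new_process, margin=5.0))
```

With only four donors the interval is wide, so the same data pass a ±5-point margin but fail a ±2-point margin. This is why the margin has to be justified and fixed before the study.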

Visualization of Workflow and Correction Integration

The following diagram illustrates a standard autologous cell therapy manufacturing workflow with key decision points where corrective actions and controls should be integrated to manage variability.

The Scientist's Toolkit: Key Research Reagent Solutions

This table details essential materials and technologies used in autologous cell therapy manufacturing to ensure process consistency and control.

Table 3: Essential Reagents and Technologies for Process Control

| Item / Technology | Function in the Workflow | Key Consideration for Reducing Variation |
|---|---|---|
| Magnetic Cell Sorting (e.g., MACS Beads) | Isolation of target T-cell populations (e.g., CD4+/CD8+) from apheresis material [15] [45]. | Using consistent bead-to-cell ratios and isolation techniques improves purity and yield of the starting population, reducing downstream variability [45]. |
| Cell Activation Reagents (e.g., anti-CD3/CD28 beads) | Activates T-cells, initiating the proliferation and manufacturing process [15]. | Standardizing the source, concentration, and duration of stimulation is critical for consistent activation and expansion [15]. |
| Genetic Modification (Viral Vectors: Lentivirus, Retrovirus) | Introduces the therapeutic CAR gene into the patient's T-cells [45]. | Vector quality, titer, multiplicity of infection (MOI), and the resulting transduction efficiency are major sources of variation. Rigorous quality control of vectors is essential [47]. |
| Cell Culture Media & Cytokines (e.g., IL-2, IL-7, IL-15) | Supports ex vivo cell expansion and influences final T-cell phenotype [15] [45]. | Media formulation and cytokine cocktail can drive differentiation towards desired memory phenotypes. Sourcing from qualified suppliers and avoiding formulation changes is key [2] [45]. |
| Closed Automated Bioreactor Systems | Provides a controlled, scalable environment for cell expansion [46]. | Replaces manual, open processes in flasks/bags, minimizing contamination risk and human error, thereby improving batch consistency [46]. |
| Cryopreservation Media (e.g., with DMSO) | Protects cells during freezing for storage and transport [15]. | Standardized freezing protocols and cryopreservation media are vital for maintaining consistent post-thaw viability and function [2] [15]. |
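
The table lists vector titer and MOI as sources of variation. MOI is the ratio of transducing units (TU) to target cells, so the vector volume to add is MOI × cells / titer, and any error in the titer propagates directly into the MOI actually delivered. The sketch below assumes titer is expressed as functional TU per mL; the function names and numbers are hypothetical.

```python
def vector_volume_ml(target_cells: float, moi: float, titer_tu_per_ml: float) -> float:
    """Vector volume (mL) that delivers `moi` transducing units per target cell."""
    if target_cells <= 0 or moi <= 0 or titer_tu_per_ml <= 0:
        raise ValueError("inputs must be positive")
    return target_cells * moi / titer_tu_per_ml

def effective_moi(volume_ml: float, actual_titer_tu_per_ml: float, target_cells: float) -> float:
    """MOI actually delivered if the true titer differs from the one used for dosing."""
    if target_cells <= 0:
        raise ValueError("target_cells must be positive")
    return volume_ml * actual_titer_tu_per_ml / target_cells

# Hypothetical run: 1e8 T cells, target MOI 5, certificate titer 1e8 TU/mL.
volume = vector_volume_ml(1e8, 5, 1e8)
print(volume)                           # 5.0 (mL)
print(effective_moi(volume, 5e7, 1e8))  # 2.5 if the true titer is only half
```

A two-fold titer error halves the delivered MOI. This is one concrete route by which vector lot quality turns into batch-to-batch differences in transduction efficiency.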

Troubleshooting Manufacturing Variability and Implementing Process Controls

Identifying and Mitigating Critical Process Parameters

Troubleshooting Guides

Guide 1: Addressing High Batch-to-Batch Variability in Autologous Cell Products

Problem: Significant variation in final product quality and yield between different patient batches.

Symptoms:

- Inconsistent cell expansion rates

- Fluctuations in critical quality attributes (CQAs)

- Variable potency and viability measurements
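
A common way to quantify these symptoms is the percent coefficient of variation (%CV = 100 × SD / mean) of each attribute across batches. This is a minimal sketch, assuming each batch is a dict of attribute name to value. The attribute names and values are made-up illustrations, not real data.

```python
from statistics import fmean, stdev

def percent_cv(values):
    """Percent coefficient of variation using the sample standard deviation."""
    if len(values) < 2:
        raise ValueError("need at least two batches")
    mean = fmean(values)
    if mean == 0:
        raise ValueError("mean is zero; %CV is undefined")
    return 100.0 * stdev(values) / abs(mean)

def cv_by_attribute(batches, attributes):
    """Return {attribute: %CV across batches}, ordered from most to least variable."""
    cvs = {attr: percent_cv([batch[attr] for batch in batches]) for attr in attributes}
    return dict(sorted(cvs.items(), key=lambda item: item[1], reverse=True))

# Illustrative batch results.
batches = [
    {"viability_pct": 80.0, "fold_expansion": 20.0},
    {"viability_pct": 90.0, "fold_expansion": 45.0},
    {"viability_pct": 70.0, "fold_expansion": 10.0},
]
print(cv_by_attribute(batches, ["viability_pct", "fold_expansion"]))
```

Ranking attributes by %CV helps decide where root-cause investigation should start.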

Root Causes & Solutions:

| Root Cause | Diagnostic Methods | Corrective Actions |
|---|---|---|
| Patient-to-patient variability in starting material [2] | Pre-apheresis CD3+ cell counts; patient treatment history analysis; cell viability assessment | Implement stringent donor screening [49]; establish patient eligibility criteria [2] |
| Inconsistent apheresis collection [2] | Review collection protocols; analyze anticoagulant variations; assess collection device types | Standardize operator training [2]; specify collection devices [2] |
| Raw material variability [49] | Functional release assays; lot-to-lot purity testing; endotoxin monitoring | Lock raw material specifications early [49]; qualify secondary suppliers [49] |

Preventive Measures:

- Implement Design of Experiments (DoE) studies to clarify acceptable input variation ranges [49]

- Establish real-time phenotyping for incoming materials [49]

- Develop flexible automated systems designed to accommodate variable growth kinetics [2]

Guide 2: Managing Raw Material Variability in Autologous Therapies

Problem: Inconsistencies in raw materials leading to irreproducible process outcomes.

Symptoms:

- Irreproducible proof-of-concept data when switching to GMP-grade reagents

- Unpredictable expansion kinetics

- Batch-to-batch potency swings

Investigation Protocol:

- Material Characterization: Test multiple lots of raw materials for functional performance

- Process Robustness: Challenge your process with intentionally variable inputs [2]

- Analytical Development: Implement functional release assays rather than compositional testing alone [49]
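
Functional testing of multiple raw-material lots can be summarized by expressing each candidate lot's mean assay readout as a percentage of a qualified reference lot. The sketch is hypothetical: the readout (for example, fold expansion of donor cells in a small-scale culture), the lot names, and the 80-120% acceptance window are placeholders, not values from the cited sources.

```python
from statistics import fmean

def compare_lots(reference_readouts, candidate_lots, lower_pct=80.0, upper_pct=120.0):
    """Return {lot_id: (% of reference mean, within window?)} for each candidate lot."""
    reference_mean = fmean(reference_readouts)
    if reference_mean == 0:
        raise ValueError("reference mean is zero")
    results = {}
    for lot_id, readouts in candidate_lots.items():
        relative = 100.0 * fmean(readouts) / reference_mean
        results[lot_id] = (relative, lower_pct <= relative <= upper_pct)
    return results

# Hypothetical functional readouts (replicate cultures per lot).
reference = [100.0, 104.0, 96.0]
candidates = {"lot_A": [98.0, 102.0], "lot_B": [70.0, 74.0]}
print(compare_lots(reference, candidates))
```

Running the same donor cells against every lot keeps donor variability from being mistaken for lot variability.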

Mitigation Strategies:

- Establish well-defined supply chain strategies early in development [49]

- Use process analytical technologies for real-time data and tighter process control [2]

- Implement a hybrid analytical matrix approach to better understand product characteristics [2]

Critical Process Parameters and Quality Attributes Monitoring

| Process Parameter Category | Specific Parameters | Monitoring Frequency | Impact on CQAs |
|---|---|---|---|
| Physicochemical Properties | pH, Dissolved Oxygen (DO) | Continuous monitoring | Cell viability, metabolic activity, differentiation potential |
| Nutrient Supply | Glucose, lactate, amino acid concentrations | Daily sampling | Cell expansion rates, volumetric productivity |
| Cultivation System | Bioreactor type, media composition, microcarrier selection | Beginning/end of process | Immunophenotype, genetic stability, purity |
| Process Control | Agitation rate, temperature, feeding strategies | Throughout expansion | Cell number, viability, batch-to-batch consistency |
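
For continuously monitored parameters such as pH and DO, one simple way to turn the data stream into alarms is an individuals (I-MR) control chart. The limits are the baseline mean ± 3σ̂, with σ̂ estimated as the average moving range divided by d2 = 1.128. This is a hypothetical sketch with made-up baseline readings. It applies only the 3σ rule (no run rules), and because closely spaced sensor readings are autocorrelated, limits computed this way can be too narrow in practice.

```python
from statistics import fmean

D2_N2 = 1.128  # bias-correction constant d2 for moving ranges of two points

def imr_limits(baseline):
    """(LCL, center, UCL) of an individuals chart estimated from baseline readings."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline readings")
    center = fmean(baseline)
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma_hat = fmean(moving_ranges) / D2_N2
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat

def flag_excursions(readings, limits):
    """Indices of readings outside the control limits."""
    lcl, _, ucl = limits
    return [i for i, x in enumerate(readings) if x < lcl or x > ucl]

# Hypothetical pH baseline from an in-control run, then new readings.
limits = imr_limits([7.20, 7.22, 7.19, 7.21, 7.20, 7.18])
print(limits)
print(flag_excursions([7.21, 7.05, 7.30], limits))
```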

| Quality Attribute Category | Specific Measurements | Acceptance Criteria | Testing Frequency |
|---|---|---|---|
| Cell Growth & Viability | Cell count, viability, doubling time | Viability >70-80% (varies by product) | Throughout process |
| Identity/Purity | Immunophenotype (CD105, CD73, CD90), lack of hematopoietic markers | Meet ISCT minimal criteria for MSCs [50] | Final product release |
| Potency | Differentiation potential, biological activity assays | Differentiation to osteoblasts, adipocytes, chondroblasts [50] | Final product and in-process |
| Safety | Sterility, mycoplasma, endotoxins, genetic stability | Sterility: no growth; Endotoxins: <5 EU/kg body weight [51] | Final product release |
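
The growth and viability rows can be checked programmatically. The sketch below computes doubling time from two viable cell counts, Td = t · ln 2 / ln(Nt / N0), and lists any attribute outside its limits. The limits in `SPECS` are hypothetical placeholders: the 70% viability floor is the low end of the range in the table, and the doubling-time ceiling is invented for illustration. A missing result is treated as a failure.

```python
import math

def doubling_time_days(n0: float, nt: float, elapsed_days: float) -> float:
    """Population doubling time from counts at the start and end of an interval."""
    if n0 <= 0 or nt <= n0 or elapsed_days <= 0:
        raise ValueError("need positive counts with net growth over a positive interval")
    return elapsed_days * math.log(2) / math.log(nt / n0)

# Hypothetical inclusive limits: (lower, upper); None means unbounded.
SPECS = {
    "viability_pct": (70.0, None),
    "doubling_time_days": (None, 3.0),
}

def out_of_spec(results, specs):
    """Names of attributes that are missing or outside their limits."""
    failures = []
    for name, (low, high) in specs.items():
        value = results.get(name)
        if value is None:
            failures.append(name)  # no result counts as a failure
        elif (low is not None and value < low) or (high is not None and value > high):
            failures.append(name)
    return failures

batch = {"viability_pct": 65.0, "doubling_time_days": doubling_time_days(1e6, 8e6, 6.0)}
print(batch["doubling_time_days"], out_of_spec(batch, SPECS))
```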

Experimental Protocols

Protocol 1: Process Characterization Using Design of Experiments (DoE)

Purpose: To systematically map critical process parameters and set defensible operating ranges [49].

Materials:

- Research-grade and GMP-grade raw materials for comparison

- Cells from multiple donors to capture variability

- Bioreactor system with monitoring capabilities

Methodology:

- Define Factors: Identify potentially critical process parameters (e.g., seeding density, media composition, feeding schedules)

- Establish Ranges: Set minimum and maximum values for each parameter based on preliminary data

- Run Experiments: Execute DoE matrix, measuring all relevant CQAs as responses

- Statistical Analysis: Identify significant factors and interactions using statistical software
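
The methodology steps can be illustrated with a two-level full factorial design. The sketch below uses hypothetical factor names and synthetic responses generated from a known model, not experimental data. It builds the 2^k run matrix in coded units (-1 = low, +1 = high) and estimates each effect as the mean response at the high level minus the mean at the low level. It does not test significance. With donor-derived cells, replicate runs and blocking by donor would usually be needed before an effect is called significant, and that is where statistical software comes in.

```python
from itertools import product
from statistics import fmean

def full_factorial(factor_names):
    """All 2^k runs in coded units (-1 = low, +1 = high)."""
    return [dict(zip(factor_names, levels))
            for levels in product((-1, 1), repeat=len(factor_names))]

def main_effects(runs, responses):
    """Mean response at +1 minus mean response at -1, for each factor."""
    effects = {}
    for name in runs[0]:
        high = [y for run, y in zip(runs, responses) if run[name] == 1]
        low = [y for run, y in zip(runs, responses) if run[name] == -1]
        effects[name] = fmean(high) - fmean(low)
    return effects

def interaction_effect(runs, responses, a, b):
    """Two-factor interaction effect, using the product column a*b as the contrast."""
    high = [y for run, y in zip(runs, responses) if run[a] * run[b] == 1]
    low = [y for run, y in zip(runs, responses) if run[a] * run[b] == -1]
    return fmean(high) - fmean(low)

# Synthetic responses from y = 10 + 3*x1 + 1*x2 + 0.5*x1*x2 (hypothetical factors).
runs = full_factorial(["seeding_density", "cytokine_dose"])
responses = [10 + 3 * r["seeding_density"] + r["cytokine_dose"]
             + 0.5 * r["seeding_density"] * r["cytokine_dose"] for r in runs]
print(main_effects(runs, responses))
print(interaction_effect(runs, responses, "seeding_density", "cytokine_dose"))
```

In coded units each estimated effect is twice the corresponding model coefficient. This is why the synthetic model's coefficients of 3, 1, and 0.5 come back as effects of 6, 2, and 1.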